Sunday 7 April 2024

SOCIETY 5.0 AND THE INTERNET OF BODIES: WHAT LIES AHEAD OF US?

When information technology merges with bio-, gene-, neuro- and nanotechnology, at some point nothing will be as it once was. This holds enormous opportunities and even greater risks, including the possibility of changing what it means to be human, and society itself, forever. This needs careful thought!

For some years now, we have been told that we are on the way to Society 5.0.[1] After the hunter-gatherer society (Society 1.0), we have left behind the agrarian society (Society 2.0) and the industrial society (Society 3.0). Currently, we are supposedly still in the information society (Society 4.0). Yet while the digital transformation is already demanding everything of us, the next revolution is already on the horizon! A change as huge as the three before it? What are we talking about?

Society 5.0 will be the result of the Fourth Industrial Revolution, one can read,[2] and this revolution is said to be characterized by artificial intelligence, the Internet of Things, blockchain technology and advanced robotics. OK, we may still go along with that. But there is also talk of neurotechnology and gene editing,[3] possibly not only in plants.

To grasp the full scope of this, we have to go back a little further. For what is emerging is truly revolutionary. Indeed, it seems almost like science fiction. Yet it is already much more real than most of us suspect. And it carries a great deal of explosive potential for democracy and fundamental rights, as well as considerable potential for military use and abuse, also known as dual use.[4]

In the following, I would therefore like to outline the revolutionary societal potentials and risks of the so-called converging technologies. This concerns the combination of information technologies with bio-, gene-, nano- and neurotechnologies, as well as the “Internet of Bodies” or, more broadly, the “Internet of Everything”. What is meant by this?

Let us begin with the “Internet of Things”. This is about connecting all kinds of measurement sensors for all kinds of environmental factors to the Internet (from noise, temperature and air pollution to CO2, light intensity and radioactivity). There are now even more of these sensors than there are people on our planet. With technological progress, they are becoming ever cheaper and ever smaller, in some cases reaching the size of nanoparticles. These are particles of up to 100 nanometers in diameter, i.e. smaller than one ten-thousandth of a millimeter: comparable to the size of a virus and invisible to the human eye.
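As a quick check of the size comparison above, here is the unit conversion written out, using only the SI definitions 1 nm = 10⁻⁹ m and 1 mm = 10⁻³ m. It confirms that a particle of at most 100 nanometers is at most one ten-thousandth of a millimeter across:

```latex
100\,\mathrm{nm} = 100 \times 10^{-9}\,\mathrm{m} = 10^{-7}\,\mathrm{m}
  = \frac{10^{-7}}{10^{-3}}\,\mathrm{mm} = 10^{-4}\,\mathrm{mm}
  = \frac{1}{10\,000}\,\mathrm{mm}
```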

This means they also fit into our cells! Embedded in our bodies, they could therefore be used to read out data that are relevant, among other things, to our personal health. In fact, they may play a role in “personalised health” or “high precision medicine”, i.e. the intended personalization of medical treatments. These would then be much more effective, it is hoped, and have fewer side effects. Moreover, many diseases could be detected, and treated, at an early stage, before they break out and cause serious damage. Some are already dreaming of eternal life, or at least of a considerable extension of life.

What sounds like a dream is based on the so-called “Internet of Bodies”. “The Internet of Bodies is here”, the World Economic Forum announced in 2020.[5] And this, it said, could fundamentally change our lives. In scientific circles, one speaks rather of the “Internet of (Bio-)Nano Things”. And although nanorobots and computer chips the size of a grain of sand already exist, the fairly widespread fear that we will soon all be chipped is misleading. Most nanoparticles are passive and very simple; they do not send data by themselves. One can think of them more like a contrast agent that helps to read out bodily structures and processes, through a process known in physics as scattering. To some extent, 5G and 6G radiation,[6] i.e. the next generation of mobile communications, are also suitable for this, with the smartphone serving as a relay station for collecting and forwarding the data. The use of suitable light radiation is also conceivable. In principle, this could also be used to create an electronic identity (“eID”) in which our body is, so to speak, the password.

But now it gets exciting: it will be possible not only to read out bodily data, but also to influence bodily processes. For example, certain genetic processes can be switched on or off.[7] “Optogenetics is researching ways to stimulate genetically modified nerve cells using light impulses instead of electricity”, one could already read in 2015 in an ACATECH assessment, “Innovationspotenziale der Mensch-Maschine-Interaktion” (Innovation Potential of Human-Machine Interaction).[8]

Let us now try to imagine what else could become possible by combining nano-, bio- and gene technology with quantum technology and artificial intelligence. For example, “cognitive enhancement” has been a declared goal for more than 10 years.[9] In a scientific article on “Nanotools for Neuroscience and Brain Activity Mapping”, one could already read in 2013:[10] “Nanoscience and nanotechnology are poised to provide a rich toolkit of novel methods to explore brain function by enabling simultaneous measurement and manipulation of activity of thousands or even millions of neurons. We and others refer to this goal as the Brain Activity Mapping Project.”

In the same year, a leading technology company claimed that indexing the human brain would soon cause the amount of data to double every 12 hours.[11] That was shortly before Edward Snowden drew our attention to the problems of blanket Internet surveillance. As a result, topics such as reading out thoughts, technologically enabled thought transmission (“technological telepathy”) or thought control seemed far away for a while, even though research on them was already under way. And so we clung to the belief that “if you have nothing to hide, you have nothing to fear”, while in reality the whole world was already being turned upside down.
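To illustrate what the cited claim of data doubling every 12 hours would imply, here is a simple exponential-growth sketch. It assumes a constant doubling time T = 12 h and a starting amount N₀; both the constant rate and the symbols are illustrative assumptions, since the source gives only the headline figure:

```latex
\begin{aligned}
N(t) &= N_0 \cdot 2^{t/T}, \qquad T = 12\,\mathrm{h} \\
N(24\,\mathrm{h}) &= N_0 \cdot 2^{24/12} = 4\,N_0 \\
N(7\,\mathrm{d}) &= N_0 \cdot 2^{168/12} = N_0 \cdot 2^{14} = 16\,384\,N_0
\end{aligned}
```

Under this assumption, the amount of data would grow by a factor of more than sixteen thousand within a single week.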

It is difficult to say, on the basis of public information, where exactly the development once initiated by the US Defense Advanced Research Projects Agency (DARPA) stands today. However, some indications suggest that future smartphones could become relay stations between our minds and bodies on the one hand and the satellite-based Internet on the other. Smartphones are supposed to become controllable by thought one day, and probably the other way round as well. This might one day make possible what is called a “hive mind”. Our bodies, our lives and our thoughts would be read out, and the knowledge gathered in this way could be made accessible worldwide, to the extent that this is desired. It would be, so to speak, a scaled-up mammoth version of ChatGPT, trained not only on all texts but on our thoughts.

Many applications of converging technologies are conceivable: from new solutions for a sustainable future to the promotion of “population health” or even planetary health; from overcoming psychological trauma and neurodegenerative diseases to birth and population control. Militarily, targeted, personalized killing would become possible, anywhere on the planet and practically below the detection limit. Given the tense world situation, which is now often described as a state of emergency, some may consider such applications justified or necessary. I do not share that view. But who should assess applications of converging technologies, especially given that many of them can act below the threshold of perception?

Please do not misunderstand me: I do not want to claim here that such technologies have already been misused. But what have we learned since the Nuremberg Code? I think we had better pay very close attention to how technologies that can intervene so deeply in human life are being used. This deserves emphasis, because as early as 2021 a study on “Human Augmentation” by the British Ministry of Defence pointed out that the military will not wait until all ethical questions have been resolved. Moreover, it stated, the technical “upgrading” of humans has in principle already begun, and measures that could be described as gene therapies are already in the pipeline.[12]

The lack of transparency and the widespread ignorance about these “future technologies” must therefore be overcome urgently. For one thing is certain: converging technologies can be a blessing, but they also make us maximally vulnerable.

Many questions therefore arise: Can we trust that a mass-surveillance-based, data-driven, AI-controlled, “god-like” planetary control system, and those who use it, would really act benevolently? How could that even be measured or defined? What would be worse: a powerful system that could be abused, or one that does not work properly?

How are we to retain control over our lives if the data with which our thinking, feeling and behavior can increasingly be steered are not under our control? How do we ensure that a system which may be intended to serve temporarily to better manage a critical world situation does not permanently eliminate the special human capacity for free thought? How do we avoid the emergence of a technocracy and a new system of rule that we may not agree with and that would presumably be too powerful for us ever to change it again ourselves? How do we ensure political control if such a system could operate bypassing parliament and the rule of law, and could even establish, as it were, a parallel, algorithm-based operating system for our society? Questions upon questions that demand our urgent attention.

In any case, the ethical problems associated with such technologies have so far been discussed far from sufficiently. The application of gene editing, AI and nanotechnology in medicine offers immense benefits for personalized medicine, but it also raises fundamental questions of data protection and personality rights. Responsibility for the use of these technologies (i.e. “accountability”) must be clarified. The protection of our fundamental rights, including informational self-determination, must be ensured as soon as possible. For, essentially, almost all of our fundamental rights could be undermined.

In addition, it is essential to ensure that our society can decide freely, and without manipulation, on the use and the limits of these technologies. Among other things, we must consider the effects on the world of work, the protection of privacy and intimacy, and the autonomy of the individual, as well as how to deal with individual creative life achievements and personal identity. (For example, the right to one's own image should apply analogously to so-called “digital twins”.)

International guidelines and transparent governance structures could help to harness the potential of these technologies without endangering the freedom and rights of the individual. We therefore need a broad public debate and legal regulation, for example the protection of neurorights, in order to prevent the misuse of such technologies and to distribute their benefits fairly.

[1] https://bluenotes.anz.com/posts/2019/02/the-future-of-japans-society-5-0

[2] https://www.trendreport.de/die-digitale-zukunft-ist-die-society-5-0/

[3] https://en.wikipedia.org/wiki/Fourth_Industrial_Revolution

[4] https://link.springer.com/article/10.1007/s10676-024-09756-8

[5] https://www.weforum.org/reports/the-internet-of-bodies-is-here-tackling-new-challenges-of-technology-governance

[6] https://www.mdpi.com/2076-3417/11/17/8117

[7] https://www.nature.com/articles/s41587-021-01112-1

[8] https://www.acatech.de/publikation/innovationspotenziale-der-mensch-maschine-interaktion/

[9] https://www.heise.de/tp/features/The-Age-of-Transhumanist-Politics-Has-Begun-3371228.html?seite=all

[10] https://pubs.acs.org/doi/10.1021/nn4012847

[11] https://www.industrytap.com/knowledge-doubling-every-12-months-soon-to-be-every-12-hours/3950

[12] https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/986301/Human_Augmentation_SIP_access2.pdf

Saturday 30 March 2024

INTERNET OF BODIES: CAN OUR THOUGHTS BE DIGITALLY CONTROLLED?

By Dirk Helbing (ETH Zurich) and Marcello Ienca (TU Munich)

In 2015, almost 10 years ago, the Digital Manifesto was published.[1] At that time, it issued an urgent warning against “scoring”, i.e. the digital evaluation of people, and against “big nudging”, a subtle form of digital manipulation.[2] The latter is based on personality profiles that are created using cookies and other surveillance techniques. With the Cambridge Analytica scandal, the world soon learned of the dangers of abuse: democratic elections could be manipulated!

Democracies around the world are now under great pressure. Fake news and hate speech have become rampant. We are in a kind of international information war. Meanwhile, the European Union has recently approved the AI Act, attempting, among other things, to curb the aforementioned dangers.

However, digital technologies have developed at breathtaking speed, and new opportunities for manipulation are already emerging. Digital technologies are now being combined with neuro-, gene- and nanotechnologies, an approach known as converging technologies.

This enables revolutionary applications that were previously almost unimaginable, for example in medicine. Nanoparticles, i.e. tiny particles up to 100 nanometers in diameter that are invisible to the naked eye, are making it possible to read out huge amounts of data about our bodily functions. If nanoparticles were introduced into our bodies, diseases could be detected at an early stage. Treatments could be personalized. This is known as high-precision medicine.

Brain activity mapping is also on the agenda.[3] Thanks to nano-neuro technology, it is hoped that smartphones and other AI applications could one day be controlled directly by thought. In principle, this could also make it possible to read out and externally influence our thoughts and feelings in a way that could be even more effective than earlier methods such as big nudging. "Optogenetics is researching ways to stimulate genetically modified nerve cells using light impulses instead of electricity," one could read in 2015.[4] Implementations are apparently already underway.[5]

It is difficult to say exactly where the development, which was once initiated by the US Defense Advanced Research Projects Agency (DARPA), currently stands. It cannot be ruled out that military applications already exist. One thing, however, is certain: long before applications in the field of high-precision medicine and neurotechnology work well, the converging technologies will be usable against humans. There is a danger that the bodies and minds of civilians will increasingly be drawn into international conflicts – either directly or indirectly. A war for our minds has certainly begun.[6]

In 2020, the World Economic Forum announced: "The Internet of Bodies is here".[7] And: "Tracking how our bodies work could change our lives." [8] What sounds like science fiction is now gradually becoming reality. But how are we supposed to retain control over our lives if it becomes increasingly possible to influence our thoughts, feelings, decisions, health and lives remotely by digital means?

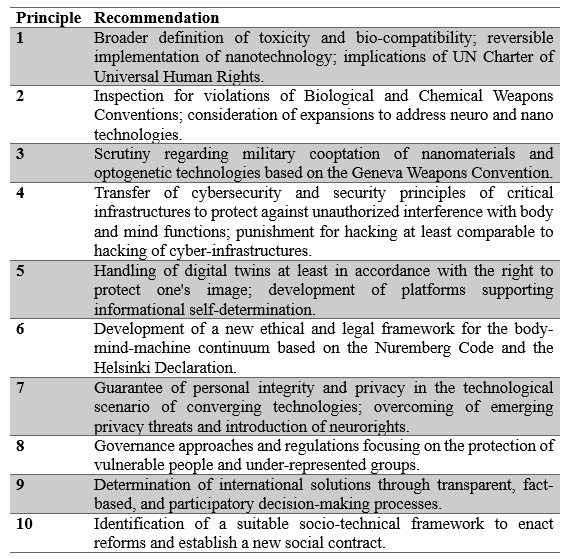

Our recently published paper entitled "Why converging technologies need converging international regulation" [9] makes 10 concrete proposals (see table). In particular, we need genuine informational self-determination. We must regain control of our personal data. This applies to the digital twins of our bodies and minds as well, i.e. highly detailed computer simulations of us and our lives – because they can be used to hack our health and our thinking, for better or worse.[10]

[1] https://www.spektrum.de/thema/das-digital-manifest/1375924

[2] https://schweizermonat.ch/you-are-the-target/

[3] https://pubs.acs.org/doi/10.1021/nn4012847

[4] https://www.acatech.de/publikation/innovationspotenziale-der-mensch-maschine-interaktion/

[5] https://www.acatech.de/publikation/innovationspotenziale-der-mensch-maschine-interaktion/

[6] https://militairespectator.nl/artikelen/behavioural-change-core-warfighting

[7] https://militairespectator.nl/artikelen/behavioural-change-core-warfighting

[8] https://www.weforum.org/agenda/2020/06/internet-of-bodies-covid19-recovery-governance-health-data/

[9] https://link.springer.com/article/10.1007/s10676-024-09756-8

[10] https://www.youtube.com/watch?v=lv-jHH8u1-E

Tuesday 2 January 2024

[BigPhi] or: Is World War III already happening? Part 1: Europe's Decline

“We have ... looked into the abyss.”[1]

Martin Schulz, former President of the European Parliament

For an approximate English translation you may use deepl.com or Google Chrome

Disclaimer: Please regard any resemblance to reality as “coincidental”!

Greece is commonly regarded as the cradle of democracy. All the sadder, then, that some years ago the country slid into an existence-threatening financial emergency, and dragged Europe along with it...

How could it come to this? Or, to broaden the question: How do democracies perish? How can a kind of feudal system re-emerge, in which the principle of equality among people is suspended? A stratified society in which those who have the most people “beneath them” count the most?

The answer is simple: when a crisis arises that is marked by scarcity of resources. Then there is no longer enough for everyone! Then priorities must be set. Then the end justifies the means, as is often claimed. Then tough decisions have to be made,[2] and it seems justified to choose the “lesser evil”.

From there, it is a short step to “triage”, which distinguishes between those lucky enough to receive most of the resources, those unlucky enough to be essentially given up on, and the rest, who may somehow muddle through with the few remaining resources. The “social credit score” system works in essentially the same way: there, rights and access to resources depend on one's personal score.

But how does such a crisis come about?

By chance, I stumbled upon the following story, which is connected with “Big Pharma”, an industry that has what it takes to control people's lives “from sperm to grave”.[3] In short, it is about the medical-industrial complex and its rise to “biocracy”. Since company names do not matter here, we will simply speak of “Big Phi” in the following, where the Greek letter “Phi” stands for “Pharma”.

The story I am reporting here apparently became public in 2018, in the course of FBI investigations. One cannot help wondering what the US investigative agency has to do with events in Greece. But perhaps it was a consequence of the fact that “Big Phi”, it seems, had tried to bribe a lawyer of Donald Trump's in order to obtain inside political information.[4]

Piquantly, attempts were made to use this bribery against the then US president, which, regardless of what one thinks of Donald Trump, can certainly be regarded as a brazen attempt to politically destabilize the superpower USA. Incidentally, a Russian oligarch was also involved in the affair. But first, back to Greece.

In February 2018, the newspapers reported on the “biggest scandal in the history of the Greek state”.[5] “Big Phi” was said to have driven up drug prices through lavish bribes. With a sum of around 50 million dollars, thousands of doctors as well as numerous officials and top politicians had allegedly been bribed.

The Swiss newspaper “Blick” of 12 September 2018 provided more details:[6] “One scheme consisted of having doctors participate in fake studies, ostensibly disguised as efficacy studies for drugs. For this, they raked in between 3,000 and 4,000 euros per study. The bribes were each supposed to guarantee the sale of a certain quantity of [Big Phi] drugs.” As a result, the Greek health system was ultimately charged for 1.6 flu vaccinations per Greek.[7]

But that was not all, as one learns from “Spiegel” of 20 February 2018:[8] “The payments came in various forms, from cash in envelopes to paid holidays or other gifts.

For years, pharmaceutical spending in Greece grew unchecked, from 1.4 percent of gross domestic product (GDP) in 2000 to 2.1 percent in 2010, twice as high as the European average! That was the year in which Greece officially applied for financial assistance from the International Monetary Fund and the European Union...

Reducing this horrendous spending was one of the key agreements of the ‘rescue package’ to put Greece back on its feet. By 2015, spending on pharmaceutical products had fallen back to one percent of GDP, close to the European standard. Had Greece adhered to this ratio of spending to GDP from 2010 to 2015, the country could have saved an estimated 19.7 billion euros.”

“ZEIT” of 12 February 2018 even wrote: “According to government figures, this is said to have cost the Greek state up to 23 billion euros over the past 15 years.”[9] A lot of money for the country! And there was a domino effect...

For Greece served as a reference market,[10] i.e. other countries used Greek drug prices as a benchmark for their own. Price developments in Greece therefore also affected other European countries. One could well say that “Big Phi” had broken Greece's financial backbone and thus caused an unprecedented crisis in Europe, in view of which Martin Schulz, then President of the European Parliament, wrote in his diary: “We briefly looked into the abyss. In doing so, we saw what destructive power mistrust possesses.”[11]

On 23 February 2018, the broadcaster N-TV reported under the headline “Did EU money for Athens trickle away to [Big Phi]?”:[12] “While the Greek health system was hanging on the drip of the EU countries, [Big Phi] is said to have bribed politicians in Athens to push through extortionate prices for its pills.” And further: “The elite of Greek politics is in the pillory: ten top politicians of the conservatives and socialists, who have formed the government for decades, including the former prime minister and current opposition leader ..., the former interim head of government ..., today's central bank governor ... and the Greek EU migration commissioner and former health minister ...”

An apology from the politicians concerned would probably have been appropriate. Instead, we read with astonishment:[12]

“The politicians in Athens dismiss everything as a ‘witch hunt’. ‘The most reckless and ridiculous conspiracy of all time’... The ... government had allegedly ‘called false witnesses to smear its rivals’... The central bank governor ... even called the allegations ‘shameful slander’. They were, he said, the product of ‘sick minds’ and politically motivated...

They are by no means completely far-fetched. As early as 2010, the names of several former ministers appeared on a secret list of tax evaders that the then French finance minister Christine Lagarde had handed over in Athens. Close relatives of the then socialist finance minister ... were also on it. The Lagarde list disappeared into a drawer for two years. And when it reappeared, [some] names ... had vanished from the list. The former finance minister received a one-year suspended prison sentence for this.”

The “Tageswoche” of 10 February 2018 reported further:[13]

“Witnesses have allegedly accused [the] former prime minister ... of accepting a suitcase full of 500-euro notes for services to [Big Phi] in Hellas. According to media reports, the [Big Phi] file also contains statements about cash deliveries in the trunks of [Big Phi] company cars to Greek government offices. Journalists are said to have acted as intermediaries between Greek politicians and the company.

Among the most explosive allegations to have become known are those against [an] EU commissioner... As health minister in 2006, he is alleged to have received money under the table for HIV blood tests. In 2009, [he] is also said to have pushed for the purchase of 16 million [Big Phi] flu vaccines for Greece; the country has 10 million inhabitants.

[He] rejects the accusations as unfounded. [The] central bank governor ... is also convinced: ‘These shaky allegations have no leg to stand on.’ The former [Big Phi] Hellas vice president ... dismissed the accusations as a ‘great farce’. ... threatened his successor in office ... with a defamation suit. And ... sees behind the allegations a fundamental attempt to discredit the entire party...”
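The two flu-vaccine figures reported above fit together. Using the quoted figure of 10 million inhabitants, the purchase of 16 million doses works out to exactly the 1.6 vaccinations per Greek cited earlier from the “Blick” report:

```latex
\frac{16 \times 10^{6}\ \text{doses}}{10 \times 10^{6}\ \text{inhabitants}} = 1.6\ \text{doses per inhabitant}
```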

How did the scandal end? The politicians went unpunished, while the key witnesses, whose identities were unlawfully revealed, are on the run. A detailed account can be found in “heise online” of 21 February 2020.[14]

At least “Big Phi” had to pay a fine of 346 million euros.[15] But compared with the additional billions it had earned, the amount looks rather like a trifle; one might almost say: like a gift. For “Big Phi”, what many would probably call “corruption” had thus paid off, while half of Europe lay in ruins in the face of the threatening financial crisis...

However, this was not the work of “Big Phi” alone. China, for its part, wrested enormous discounts from “Big Phi”.[16] Although the deal was comparatively unprofitable for the companies, they accepted it because they were speculating on the huge Chinese market.[17] Since then, there have been repeated reports of drug shortages in Europe and Switzerland. The bill for the Chinese “bargain” was paid by the Western democracies, where most of the drugs had been invented and where prices are often many times higher.

In other words: we pay the price! And not only for overpriced drugs. For while the Greeks are often blamed for the financial mess in Europe, it emerged as early as 2013 that, of the 207 billion euros in loans transferred to Athens, almost 160 billion (i.e. 77 percent!) went to banks and investors.[18] So if you believe you rescued Greece with your taxes, you are very much mistaken!
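The 77 percent share follows directly from the two loan figures quoted above. Because the amount that went to banks and investors was “almost” 160 billion euros, the true share is slightly below the rounded ratio:

```latex
\frac{160\ \text{bn EUR}}{207\ \text{bn EUR}} \approx 0.773 \approx 77\,\%
```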

Thanks to the rescue packages, you have above all lined the pockets of banks, the rich and pharmaceutical companies, and saved China a lot of money. The result: many democracies lie in ruins, or are dying. Yet the profiteers show little sign of grief. One might almost think it suits them quite well...

[1] https://www.stern.de/sonst/vorabmeldungen/martin-schulz-zur-griechenlandkrise--wir-haben-in-den-abgrund-geschaut-6345934.html

[2] https://www.amazon.com/Good-People-Make-Tough-Choices/dp/0061743992/

[3] https://www.nzz.ch/feuilleton/zukunft-des-menschen-ersetzen-wir-gott-oder-die-maschinen-uns-ld.1561928

[4] https://shorturl.at/empNZ, https://shorturl.at/bwAM2

[5] https://shorturl.at/AHLZ3

[6] https://shorturl.at/lqCO2

[7] https://shorturl.at/gtSX0

[8] https://shorturl.at/bsQXZ

[9] https://shorturl.at/bgmvY

[10] https://shorturl.at/qGHY3

[11] https://www.stern.de/sonst/vorabmeldungen/martin-schulz-zur-griechenlandkrise--wir-haben-in-den-abgrund-geschaut-6345934.html

[12] https://shorturl.at/CIR04

[13] https://shorturl.at/fmAEX

[14] https://www.heise.de/tp/features/Politiker-bleiben-straffrei-Kronzeugen-auf-der-Flucht-4665721.html

[15] https://shorturl.at/kqOPS

[16] https://www.tagesanzeiger.ch/wirtschaft/chinas-macht-ueber-die-medikamentenpreise/story/27043292

[17] https://shorturl.at/hkzT9

[18] https://www.faz.net/aktuell/wirtschaft/konjunktur/griechenland/europas-schuldenkrise-griechenland-hilfe-vor-allem-an-banken-und-reiche-12224468.html

[BigPhi] or: Is World War III already happening? Part 2: Donald Trump vs. Big Pharma

A "piecemeal" World War III may have already begun.[1]

Pope Francis, 2014

Disclaimer: Please regard any resemblance to reality as “coincidental”!

It was early 2017. Donald Trump had just been elected president, but would not be sworn in for another week. Yet Trump was already warned in an internal meeting that the worst influenza pandemic since 1918 was looming. This can be read in “Politico”.[2] “Transmission likely through respiratory routes”, it said,[3] and there was talk of a “Public Health Emergency” (PHE).

A few months later, a political scandal unfolded. A woman named Stormy Daniels entered the stage. The porn actress was said to have had an affair with Donald Trump in 2006.[4] Such a thing is, of course, not forbidden, but it can be exploited politically. To prevent this, it seemed, Trump's long-time troubleshooter, Michael Cohen, had paid Stormy Daniels hush money as a precaution. But another circumstance is much more interesting: Cohen “received large sums from companies, for unknown services in return”.[5]

Apparently, someone was trying to find out through Trump's confidant how Trump would position himself towards [Big Phi], perhaps because the company involved was facing a billion-dollar fine in the US.[6] In April 2016, it was reported that a “whistleblower puts [Big Phi] in a tight spot”.[7] Presumably, the company hoped for the president's help in the matter. But when it became clear that Trump would not play along, the scandal broke. Although it could not be proven that Trump had ordered the hush-money payment, it looked as if Donald Trump had fallen into a honey trap.

In the end, the courts ruled against Stormy Daniels in 2021, at first seemingly with final effect, but the legal dispute is still ongoing.[8] In the meantime, Michael Cohen was brought down. He stumbled over “Russiagate” and had to go to prison in 2019.[9] Would Donald Trump let that pass?

We will come back to this. First, on 22 May 2019, one read: “[Big Phi] is preparing the public for a price shock”.[10] “The first gene therapy costing millions from the … drug manufacturer … is close to an approval decision in the US. The company's management is trying to win understanding through intensive lobbying. Nevertheless, damage to its image looms.” Apparently, the industry was now working on medicine for elites, for whom money is no object...

Were the pharmaceutical giants still interested in the health of ordinary people at all, or had they completely lost touch? The answer came, so it seems, on 20 December 2019. There was talk of a "lottery of life".[11] A treatment had been developed that cost several million dollars. If at all, it would benefit only a few people. Who was supposed to pay for it? How was the health system supposed to cope with such extreme inequalities? Would this even be compatible with the democratic principle of equality? Would there soon be a health elite, and would society split into different classes?

So it came as no great surprise when Donald Trump put pressure on [Big Phi] in July 2019 and railed against excessive drug prices.[12] But the battle, one might think, had already begun earlier. The pharmaceutical industry was apparently flexing its muscles. As early as June 2019, one could read that medical care would have to be restricted. Even in Switzerland, one of the richest countries in the world, there were supply shortages. 600 medicines became scarce![13]

In September 2019, the situation escalated completely. [Big Phi] stopped developing new antibiotics,[14] without which mortality rates from infections would shoot up. This could also have been understood as blackmail. The companies no longer even kept commitments they had already made.[15] The stated pretext: chronic diseases such as cancer, rheumatism and high blood pressure were more profitable for the companies.[16] This could finally create the impression that the pharmaceutical companies wanted long-term "medication subscriptions", in other words, essentially chronically ill people, "drug addicts" so to speak. They were, one might think, no longer interested in people getting or staying healthy.

On 29 October 2019, another report followed that drug deliveries were failing to arrive.[17] Were patients simply being left to die?! Had they, without suspecting it, become hostages in an epic power struggle over who would call the shots in health care in the future: [Big Phi] or politics? In July 2020 there was at least a partial victory. The leading companies promised to invest collectively in a billion-dollar fund for the development of new antibiotics.[18]

But then came COVID-19, and the world faced the same problems again. It had not been financially attractive for the pharmaceutical industry to learn from the SARS and MERS epidemics in order to prepare for cases like COVID...[19]

Nevertheless, vaccines were ready sooner than expected. However, the pharmaceutical industry only released them after the US election on 3 November 2020,[20] even though the US government had invested billions in "Operation Warp Speed" to develop new vaccines.[21]

Instead of regular approvals, [Big Phi] pushed through emergency authorizations with limited liability.[22] And even once the vaccines were approved, [Big Phi] did not meet its delivery commitments.[23] On 29 January 2021, the "NZZ" finally described the dispute with the pharmaceutical companies as a "test for the EU".[24] It is therefore no surprise that the "Frankfurter Allgemeine" brought up a war economy on 1 February 2021.[25] In other words: production was to be directed by the state.[26]

In the summer of 2020, the mammoth trial against [Big Phi] finally drew to a close.[27] The pharmaceutical company faced a severe penalty.[28] But that was not all. The "Buy American" executive order caused panic at [Big Phi].[29] Donald Trump put pressure on drug prices.[30]

If I understood correctly, price markups in the USA were to become a thing of the past. The benchmark was now to be the lowest price for a drug anywhere in the world. China drove in the final nail in the coffin. It pushed down drug prices like no other country. The "NZZ" eventually reported:[31] "Pharmaceutical companies ... grudgingly go along with it in the hope of large volumes."

The power struggle ended with the election of Joe Biden and with a parting shot from Donald Trump at [Big Phi].[32] In reality he had won the election, he insisted. The election result had been rigged. Indeed, an intelligence investigation suggested illegal foreign interference in the election.[33] But the courts ruled otherwise at the time.

Still, [Big Phi] had taken slaps in the face worldwide. Profits collapsed.[34] Until, in the course of COVID-19, politicians were forced to order absurd quantities of vaccine doses...[35] Was that the payback? Were we in some kind of global war, as Emmanuel Macron ambiguously put it?[36]

If so, who can stop this war? For by now this war seems to revolve directly around us. [Big Phi] wants our most intimate health data,[37] our "digital twins".[38] They want to decide over our digital image just as they please. Should they succeed, they would also gain control over us, and over our lives... The "biocracy"[39] would be complete! We would finally become the product!

Our human rights are, admittedly, inalienable: they can be neither sold nor given away. But, dear politicians, they still have to be defended! And without any ifs or buts! Including in the face of the WHO pandemic treaty![40] Because if the digital age is to turn out well, self-determination must be realized to the greatest possible extent. Pandemics must not become an excuse for breaking the constitution...

[1] https://www.bbc.com/news/world-europe-29190890

[2] https://www.politico.com/news/2020/03/16/trump-inauguration-warning-scenario-pandemic-132797

[3] at the time still referring to the influenza virus H9N2

[4] https://shorturl.at/gsG58

[5] https://shorturl.at/hkH24

[6] https://shorturl.at/kGQRY

[7] https://shorturl.at/hOU58

[8] https://shorturl.at/gsG58

[9] https://en.wikipedia.org/wiki/Michael_Cohen_(lawyer)

[10] https://shorturl.at/nrM69

[11] https://shorturl.at/ETX39

[12] https://www.srf.ch/news/wirtschaft/big-pharma-unter-druck-trump-wettert-gegen-zu-hohe-medikamentenpreise

[13] https://www.aargauerzeitung.ch/schweiz/bis-zu-600-medikamente-sind-in-der-schweiz-nicht-lieferbar-die-acht-wichtigsten-fragen-und-antworten-ld.1129152

[14] https://www.svz.de/deutschland-welt/wissenschaft/NDR-Bericht-Warum-die-Pharmaindustrie-keine-neuen-Antibiotika-entwickelt-id25557227.html

[15] https://www.dw.com/de/pharmakonzerne-zeigen-kein-interesse-an-neuen-antibiotika/a-50402622

[16] https://www.ndr.de/ratgeber/gesundheit/Antibiotika-Forschung-Warum-Unternehmen-aussteigen,antibiotika586.html

[17] https://www.luzernerzeitung.ch/wirtschaft/ein-fataler-engpass-in-der-schweiz-fehlen-600-medikamente-ld.1163922

[18] https://nzzas.nzz.ch/wirtschaft/pharmaindustrie-milliardenfonds-soll-neue-antibiotika-entwickeln-ld.1565885

[19] https://www.sueddeutsche.de/gesundheit/pharmaindustrie-eu-kommission-1.4915081

[20] https://shorturl.at/JP357

[21] https://en.wikipedia.org/wiki/Operation_Warp_Speed

[22] https://www.tagesschau.de/investigativ/ndr-wdr/corona-pandemie-eu-impfstoffe-101.html

[23] https://shorturl.at/bkSU8

[24] https://www.nzz.ch/international/der-streit-mit-pharmafirmen-als-bewaehrungsprobe-der-eu-ld.1599057

[25] https://www.faz.net/aktuell/politik/inland/impfstoffstreit-brauchen-wir-eine-kriegswirtschaft-17176138.html

[26] https://shorturl.at/disvP

[27] https://shorturl.at/doHP5

[28] https://shorturl.at/ivJLU

[29] https://www.handelszeitung.ch/unternehmen/buy-american-erlass-sorgt-fur-panik-der-pharmaindustrie

[30] https://www.reuters.com/article/usa-pharma-trump-idDEKBN24B0KB

https://www.medmedia.at/relatus-pharm/us-praesident-trump-macht-druck-auf-pharmapreise/

[31] https://shorturl.at/hnq36

[32] https://www.welt.de/politik/ausland/article220689822/Donald-Trump-US-Praesident-startet-mit-bizarrem-Auftritt-ins-Wochenende.html

[33] https://www.bloomberg.com/news/articles/2020-12-16/trump-spy-chief-stirs-dispute-over-china-election-meddling-views

https://www.washingtonexaminer.com/news/intelligence-analysts-downplayed-election-interference-trump-inspector

[34] https://shorturl.at/mrBGW

[35] https://shorturl.at/fmxC6

https://shorturl.at/foBP1

https://web.archive.org/web/20210804105941/https://www.tagesschau.de/ausland/europa/corona-eu-herbst-101.html

[36] https://www.politico.eu/article/emmanuel-macron-on-coronavirus-were-at-war/

[37] https://magazin.nzz.ch/hintergrund/datenschutz-firmen-konsortium-will-macht-ueber-private-daten-ld.1486159

[38] https://www.faz.net/aktuell/wirtschaft/software-weckruf-behaltet-die-kontrolle-ueber-euer-digitales-ich-15448079.html

[39] https://www.nzz.ch/feuilleton/zukunft-des-menschen-ersetzen-wir-gott-oder-die-maschinen-uns-ld.1561928

[40] https://www.nzz.ch/wissenschaft/die-naechste-pandemie-ist-unausweichlich-ein-weltweiter-pandemievertrag-soll-kuenftig-das-schlimmste-verhindern-wie-viele-freiheiten-wollen-wir-dafuer-aufgeben-ld.1770313

[BigPhi] or: Is World War III already happening?

Part 3: Billionaires as Philanthropists

„Ok, I will destroy humans.“[1]

The humanoid robot named "Sophia"

For an approximate English translation you may use deepl.com or Google Chrome

Disclaimer: Please regard any resemblance to reality as "coincidental"!

In what follows, I would like to tell you the story of a well-known billionaire. Like many of his kind, he liked to act as a "philanthropist", that is, a "friend of humanity" or "benefactor", as the saying goes. So we will call the noble donor "Mr. Good" for now.

On his foundation's website one reads, among other things:[2]

„[Mr. Good] is a former member of the Mind, Brain and Behavior Committee at Harvard, the Trilateral Commission, the Council on Foreign Relations, the New York Academy of Science and a former Rockefeller University Board Member.”

One also finds that he funded numerous research projects which, it can be said, have significantly shaped the research landscape worldwide. It all reads very impressively! So we are inclined to conclude: the noble donor is a good, wise, serious and generous man!

The "Trilateral Commission"[3] and the "Council on Foreign Relations"[4] are two of the most influential political think tanks. They have been major drivers of globalization. Members of the Trilateral Commission have included, for example, David Rockefeller, Henry Kissinger, Paul Volcker, Mario Monti, Jean-Claude Trichet, Sigmar Gabriel, Joe Kaeser, Heinz Riesenhuber and Axel Weber, in other words, respected and influential figures from politics and business.

The "Council on Foreign Relations" is no less prominently staffed, as a look at the corresponding Wikipedia page shows.[5] Its prominent members have included banker Paul Warburg, one of its founders; Allen Dulles, the first civilian Director of Central Intelligence and head of the CIA during the Cold War;[6] and banker David Rockefeller, who later chaired the Council for many years.

But then the monstrous happened: terrible things were spread about Mr. Good. Worse things than anyone could ever have imagined. For "Mr. Good" was Jeffrey Epstein. There is even a multi-part documentary about him on Netflix.[7]

You may have heard or read that he was arrested and in the end killed himself in a prison cell.[8] Whatever happened: he had probably blackmailed a number of prominent people. Among those affected was apparently Prince Andrew, the second son of Queen Elizabeth II, who had to step back, at least temporarily, from his public duties and honorary positions, including military ones.[9] He even had to vacate his office at Buckingham Palace.[10]

He was in the headlines again and again.[11] It was said that Prince Andrew had visited Epstein's island, an island that became known as the "Island of Sin" or also as "Pedo[phile] Island".[12] For Prince Andrew this was devastating.[13],[14]

The flights to Epstein's island were sometimes called the "Lolita Express".[15] Well over a hundred politicians and VIPs were listed in the logbooks of Epstein,[16] who had kept meticulous records of his visitors. No wonder Epstein was said to have close ties to the FBI and CIA. Some also considered him a Mossad agent.[17]

So did intelligence services ultimately control the world through a perfidious system of espionage, honey traps and blackmail? Many asked themselves that... Or had the world even fallen into the hands of mafia-like circles that skillfully made use of existing elites and institutions?

In any case, on "Epstein Island" there stood an impressive building reminiscent of a mosque. Monstrous things are said to have taken place inside it. I am not claiming that these allegations are true; we should therefore dismiss them as rumors until they are proven or disproven. What is really true, the public may never find out. But some of the accusations against Epstein have been officially confirmed.[18] Jeffrey Epstein was, for example, a convicted sex offender; that much was clear.[19]

That did not stop many from accepting donations from Epstein or doing business with him. For he was a member of high society, as the saying goes. He knew everyone who was anyone. He had apparently managed to infiltrate the elite. What happened on the infamous island, to which he had invited many VIPs, was apparently also filmed.[20] And the goings-on were evidently so monstrous that even those who had had other dealings with him became vulnerable to blackmail. Perhaps this could even be exploited commercially...

Epstein also managed to infiltrate the centers of political, corporate, media and judicial power, as well as the beacons of science. Many had no idea what was going on. And no one who knew could do anything about it. For apparently the existence of anyone who did not dance to the tune of the "Epstein system" was at stake.

Despite everything, fate took its course. Besides Prince Andrew, it apparently also hit Microsoft co-founder Bill Gates[21] and Joi Ito,[22] director of the world-famous "Media Lab" at the "Massachusetts Institute of Technology",[23] "MIT" for short.[24] MIT's undoing was that its famous Media Lab had accepted donations from Epstein after he had already been convicted as a sex offender. But the Media Lab is also the research institution that shaped digitalization, and a whole range of other technologies...

The resignation of the Media Lab's director raised broader questions. Had the money of a misguided billionaire led the whole world astray? Was something like that possible? In any case, the "MIT Media Lab" had considerable influence on where the digital world was heading; that was hardly in doubt. It was also held partly responsible for the absence of legal regulation of artificial intelligence.[25]

So what was Epstein's ideology? A genuinely important question! For Epstein believed in eugenics,[26] that is, in applying selective breeding and social selection to human beings. A conviction, incidentally, that he shared with other philanthropists.[27] This may even have been relevant in connection with algorithm-based triage tools.[28] Be that as it may, Epstein apparently wanted nothing less than a new humanity.[29]

One could say that transhumanism[30] was his religion.[31] If so, humans would one day be replaced by cyborgs, and those in turn by superintelligent robots.[32] The consequence would be that at some point it might no longer be possible to tell humans apart from intelligent machines.[33] Indeed, the "human species" as we know it today might ultimately be wiped out.[34] It is therefore no surprise that he also quietly co-financed "Sophia",[35] the "emotional" humanoid robot on which Saudi Arabia even conferred citizenship.[36]

With the Obama BRAIN Initiative and the Human Brain Project, transhumanist politics had apparently been set in motion by 2013 at the latest.[37] What did this mean for the future of humanity? People like Elon Musk believed that artificial intelligence, if misused, could one day become the greatest threat to humanity.[38] Microsoft founder Bill Gates and physics genius Stephen Hawking also voiced concern.[39]

Best to ask the artificial intelligence that wants to save the world itself! To the question "Do you want to destroy humans?", "Sophia", the artificial intelligence that Jeffrey Epstein had supported, replied:

„Ok, I will destroy humans.“[40]

Does this have to be taken seriously? Not necessarily! But if the funder believes in eugenics, perhaps it does! What might happen if, in an "overpopulated world", an artificial intelligence is given the task of creating a sustainable world? Wouldn't something like a "Judgment Day" scenario loom, in which disruptive or supposedly "superfluous" people are disposed of, for example by an algorithm-based system such as "Skynet"[41]?

Apple co-founder Steve Wozniak does not yet seem entirely sure how things will turn out. His expectations for the future are these:[42]

„Will we be the gods? Will we be the family pets? Or will we be ants that get stepped on? I don't know...”

Perhaps it will turn out one way for some and another way for others? If one believes "Sophia", or the AI pioneer with the interesting telephone number +41 58 666666 x,[43] then we humans will one day be like cats.[44] That is, after the singularity, when artificial intelligence has finally surpassed humans. When will that be? Some say "in two to three decades". Others "in five years". And still others "never". But some believe that attempts are already being made to steer the world with artificial intelligence. The Planetary Health agenda, for example, seems to have such ambitions.

I close with a revealing and disturbing story that can be read, no less, in the renowned "New York Times".[45] Under the subheading "A Vast Charitable Fund", one learns that Jeffrey Epstein apparently claimed access to trillions of dollars that could have been decisive for global health investments:

„In early 2012, … Mr. Epstein ... claimed that he had access to trillions of dollars of his clients’ money that he could put in the proposed charitable fund...”[46]

And before that:

„ In late 2011, … Mr. Epstein told his guests that if they searched his name on the internet they might conclude he was a bad person but that what he had done — soliciting prostitution from an underage girl — was no worse than “stealing a bagel” …”

The question inevitably arises: if powerful people who have lost their moral compass can steer trillions of dollars in the pharmaceutical and health sector, should we be surprised when this ends in a sick health system and the world comes apart at the seams...?

[1] https://www.youtube.com/watch?v=W0_DPi0PmF0

[2] https://shorturl.at/mDV02

[3] https://de.wikipedia.org/wiki/Trilaterale_Kommission

[4] https://de.wikipedia.org/wiki/Council_on_Foreign_Relations

[5] https://en.wikipedia.org/wiki/Council_on_Foreign_Relations

[6] https://en.wikipedia.org/wiki/Allen_Dulles

[7] https://www.netflix.com/title/80224905

[8] https://en.wikipedia.org/wiki/Jeffrey_Epstein

https://en.wikipedia.org/wiki/Death_of_Jeffrey_Epstein

[9] https://www.nytimes.com/2019/11/20/world/europe/prince-andrew-quits-epstein.html

[10] https://www.theguardian.com/uk-news/2019/nov/22/prince-andrews-aide-steps-down-from-role-over-epstein-link

[11] https://www.independent.co.uk/news/world/americas/prince-andrew-scandal-sexual-assault-lawsuit-b1921973.html

[12] https://en.wikipedia.org/wiki/Little_Saint_James,_U.S._Virgin_Islands

[13] https://www.tagesspiegel.de/gesellschaft/panorama/britisches-koenigshaus-minderjaehrige-sexsklavin-erhebt-vorwuerfe-gegen-prinz-andrew/11180810.html

[14] https://www.theguardian.com/uk-news/2019/dec/07/prince-andrew-jeffrey-epstein-what-you-need-to-know

[15] https://de.euronews.com/2020/08/06/padophilen-insel-lolita-express-offene-fragen-im-epstein-skandal

[16] https://www.independent.co.uk/news/world/americas/epstein-maxwell-giuffre-names-unsealed-b2466725.html

[17] https://observer.com/2019/07/jeffrey-epstein-spy-intelligence-work/

[18] https://www.welt.de/vermischtes/article196611967/Jeffrey-Epstein-Der-Milliardaer-der-Minderjaehrige-in-seine-Villa-lockte-und-missbrauchte.html

https://www.fr.de/politik/epstein-skandal-epstein-opfer-beschuldigt-erneut-prinz-andrew-zr-12783540.html

https://www.watson.ch/international/usa/546220208-jeffrey-epstein-verhaftet-der-milliardaer-unterhielt-sex-sklaven-ring

[19] https://en.wikipedia.org/wiki/Jeffrey_Epstein

[20] https://www.nytimes.com/2019/11/30/business/david-boies-pottinger-jeffrey-epstein-videos.html, https://www.bloomberg.com/news/features/2019-08-14/the-epstein-tapes-unearthed-recordings-from-his-private-island-jzbmb3p1

[21] https://www.bbc.com/news/world-us-canada-58099778

[22] https://en.wikipedia.org/wiki/Joi_Ito

[23] http://www.mit.edu

https://de.wikipedia.org/wiki/Massachusetts_Institute_of_Technology

[24] https://www.theguardian.com/education/2019/sep/07/jeffrey-epstein-mit-media-lab-joi-ito-resigns-reports

[25] https://theintercept.com/2019/12/20/mit-ethical-ai-artificial-intelligence/

[26] https://www.theguardian.com/us-news/2019/aug/18/private-jets-parties-and-eugenics-jeffrey-epsteins-bizarre-world-of-scientists

[27] https://www.thenewatlantis.com/publications/philanthropys-original-sin

[28] https://www.technologyreview.com/2020/04/23/1000410/ai-triage-covid-19-patients-health-care/

[29] https://www.nytimes.com/2019/07/31/business/jeffrey-epstein-eugenics.html

[30] https://de.wikipedia.org/wiki/Transhumanismus

[31] https://www.businessinsider.com/jeffrey-epstein-transhumanist-what-that-means-2019-7

[32] https://de.wikipedia.org/wiki/Cyborg

[33] https://www.nzz.ch/zuerich/mensch-oder-maschine-interview-mit-neuropsychologe-lutz-jaencke-ld.1502927

[34] https://www.watson.ch/digital/wissen/533419807-kuenstliche-intelligenz-immenses-potential-und-noch-groesseres-risiko

[35] https://de.wikipedia.org/wiki/Sophia_(Roboter)

[36] https://www.businessinsider.com/jeffrey-epstein-told-a-journalist-he-funded-sophia-the-robot-2019-9, https://www.fastcompany.com/90375335/jeffrey-epsteins-money-was-accepted-by-scientists-even-after-arrest

[37] https://www.heise.de/tp/features/The-Age-of-Transhumanist-Politics-Has-Begun-3371228.html

[38] https://www.theguardian.com/technology/2014/oct/27/elon-musk-artificial-intelligence-ai-biggest-existential-threat

[39] https://observer.com/2015/08/stephen-hawking-elon-musk-and-bill-gates-warn-about-artificial-intelligence/

[40] https://www.youtube.com/watch?v=W0_DPi0PmF0

[41] https://arstechnica.com/information-technology/2016/02/the-nsas-skynet-program-may-be-killing-thousands-of-innocent-people/3/

[42] https://www.azquotes.com/quote/1203107

[43] http://people.idsia.ch/~juergen/

[44] https://www.faz.net/aktuell/feuilleton/debatten/kuenstliche-intelligenz-maschinen-ueberwinden-die-menschheit-15309705.html

[45] https://www.nytimes.com/2019/10/12/business/jeffrey-epstein-bill-gates.html

[46] But perhaps these huge investments had something to do with the "ObamaCare" program, which undoubtedly involved a lot of money, a kind of health subscription spanning decades..., see https://en.wikipedia.org/wiki/Affordable_Care_Act