Making the invisible hand work

by Dirk Helbing

"Any sufficiently advanced technology is indistinguishable from magic."

Sir Arthur Clarke

This is Chapter 6 of The Automation of Society Is Next: How to Survive the Digital Revolution.

Complex dynamical systems are difficult to control because they have a natural tendency to self-organize, driven by the inherent forces between their system components. But self-organization may have favorable results, too, depending on how the system's components interact. By slightly modifying these interactions – usually interfering at the right moment in a minimally invasive way – one can produce desirable outcomes, which even resist moderate disruptions. Such assisted self-organization is based on distributed control. Rather than imposing a certain system behavior in a top-down way, assisted self-organization reaches efficient results by using the hidden forces, which determine the natural behavior of a complex dynamical system.

We have seen that the behavior of complex dynamical systems – how they evolve and change over time – is often dominated by the interactions between the system's components. That's why it is hard to predict how the system will behave, and why it is so difficult to control complex systems. In an increasingly complex and interdependent world, these kinds of challenges will become ever more relevant. Even if we had all information and the necessary means at our disposal, we couldn't hope to compute, let alone engineer, an optimal system state because the computational requirements are just too high. For this reason, the complexity of such systems undermines the effectiveness of centralized control. Such centralized control efforts might not only be ineffective but can make things even worse. Steering society like a bus cannot work, even though nudging seems to enable it. It rather undermines the self-organization that is taking place on many levels of our society all the time. In the end, such attempts result in "fighting fires" – struggling to defend ourselves against the most disastrous outcomes.

If we're to have any hope of managing complex systems and keeping them from collapse or crisis, we need a new approach. As Albert Einstein (1879-1955) said: "We cannot solve our problems with the same kind of thinking that created them." Thus, what other options do we have? The answer is perhaps surprising. We need to step back from centralized top-down control and find new ways of letting the system work for us, based on distributed, "bottom-up" approaches. For example, as I will show below, distributed real-time control can counter traffic jams, if a suitable approach is adopted. But this requires a fundamental change from a component-oriented view of systems to an interaction-oriented view. That is, we have to pay less attention to the individual system components that appear to make up our world, and more to their interactions. In the language of network science, we must shift our attention from the nodes of a network to its links.

It is my firm belief that once this change in perspective becomes common wisdom, it will be found to be of similar importance to the discovery that the Earth is not the center of the universe – a change in thought which led from a geocentric to a heliocentric view of the world. The paradigm shift towards an interaction-oriented, systemic view will have fundamental implications for the way in which all complex anthropogenic systems should be managed. As a result, it will irrevocably alter the way economies are managed and societies are organized. In turn, however, an interaction-oriented view will enable entirely new solutions to many long-standing problems.

How does an interaction-oriented, distributed control approach work? When many system components respond to each other locally in non-linear ways, the outcome is often a self-organized collective dynamics, which produces new ("emergent") macro-level structures, properties, and functions. The kind of outcome, of course, depends on the details of the interactions between the system components, so the crucial point is what kinds of interactions apply. As colonies of social insects such as ants show, it is possible to produce amazingly complex outcomes by surprisingly simple local interactions.

One particularly favorable feature of self-organization is that the resulting structures, properties and functions occur by themselves and very efficiently, by using the forces within the system rather than forcing the system to behave in a way that is against "its nature". Moreover, the resulting structures, properties and functions are stable with regard to moderate perturbations, i.e. they tend to be resilient against disruptions, as they tend to reconfigure themselves according to "their nature". With a better understanding of the hidden forces behind socio-economic change, we can now learn to manage complexity and use it to our advantage!

Self-organization "like magic"

Why is self-organization such a powerful approach? To illustrate this, I will start with the example of the brain and then turn to systems such as traffic and supply chains. Towards the end of this chapter and in the rest of this book, we will then explore whether the underlying success principles might be extended from biological and technological systems to economic and social systems.

Our bodies are perfect examples of the virtues of self-organization in that they constantly produce useful functionality from the interactions of many components. The human brain, in particular, is made up of 100 billion information-processing units, the neurons. On average, each neuron is connected to about a thousand others, and the resulting network exhibits properties that cannot be understood by looking at a single neuron in isolation. In concert, this neural network doesn't only control our motion, but it also supports our decisions, and the mysterious phenomenon of consciousness. And yet, even though our brain is so astounding, it consumes less energy than a typical light bulb! This shows how efficient self-organization can be.

However, self-organization does not mean that the outcomes of the system are necessarily desirable. Traffic jams, crowd disasters, and financial crises are good examples of this, and that's why self-organization may need some "assistance". By modifying the interactions (for instance, by introducing suitable feedbacks), one can let different outcomes emerge. The disciplines needed to find the right kinds of interactions in order to obtain particular structures, properties, or functions are called "complexity science" and "mechanism design".

"Assisted self-organization" slightly modifies the interactions between system components, but only where necessary. It uses the hidden forces acting within complex dynamical systems rather than opposing them. This is done in a similar way as engineers have learned to use the forces of nature. We might also compare this with Asian martial arts, where one tries to take advantage of the forces created by the opponent.

In fact, the aim of assisted self-organization is to intervene locally, as little as possible, and gently, in order to use the system's capacity for self-organization to efficiently reach the desired state. This connects assisted self-organization with the approach of distributed control, which is quite different from the nudging approach discussed before.[1] Distributed control is a way in which one can achieve a certain desirable mode of behavior by temporarily influencing interactions of specific system components locally, rather than trying to impose a certain global behavior on all components at once. Typically, distributed control works by helping the system components to adapt when they show signs of deviating too much from their normal or desired state. In order for this adaptation to be successful, the feedback mechanism must be carefully chosen. Then, a favorable kind of self-organization can be reached in the system.

In fact, the behavior that emerges in a self-organizing complex system isn't just random, nor is it totally unpredictable. Such systems tend to be drawn towards particular stable states, called "attractors". Each attractor represents a particular type of collective behavior. For example, Fig. 6.1 shows six typical traffic states, each of which is an attractor. In many cases, including freeway traffic, we can understand and predict these attractors by using computer models to simulate the interactions between the system components (here, the cars). If the system is slightly disrupted, it will usually return to the same attractor state. This is an interesting and important feature. To some extent, this makes the complex dynamical system resilient to small and moderate disruptions. Larger disruptions, however, will cause the system to settle in a different attractor state. For example, free-flowing traffic might be disrupted to the point that a traffic jam is formed, or one congestion pattern gives way to another one.

Figure 6.1: Examples of congested traffic states.[2]

The physics of traffic

Contrary to what one might expect, traffic jams are not just queues of vehicles that form behind bottlenecks. Scientists studying traffic were amazed when they discovered the large variety and complexity of empirical congestion patterns in the 1990s. The crucial question was whether such patterns are understandable and predictable enough that new ways of avoiding congestion could be devised. In fact, a mathematical theory now exists that can explain the fascinating properties of traffic flow and even predict the extent of congestion and the resulting delay times.[3] It posits, in particular, that all traffic patterns either correspond to one of the fundamental congestion patterns shown above or are "composite" congestion patterns made up of the above "elementary" patterns. This has an exciting analogy with physics, where we find composite patterns made up of elementary units, too (such as electrons, protons, and neutrons).

I started to work on this traffic theory when I was a postdoctoral researcher at the University of Stuttgart, Germany. Together with Martin Treiber and others, I studied a model of freeway traffic in which each vehicle was represented by a "computer agent". In this context, the term "agent" refers to a vehicle being driven along a road in a particular direction with a certain "desired speed". Obviously, however, the simulated vehicle would slow down to avoid collisions whenever needed. Thus, our model attempted to build a picture of traffic flow from the bottom up, based on simple interaction rules between individual cars.
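To make the bottom-up idea concrete, here is a minimal sketch of a single interaction rule of this kind. It uses the Intelligent Driver Model, a well-known car-following model published by Treiber, Hennecke and Helbing. The chapter does not state which model was used in the study described here, so the choice of model, the parameter values, and the function name are illustrative assumptions.

```python
import math

def idm_acceleration(v, gap, dv, v0=33.3, T=1.5, a_max=1.0, b=2.0, s0=2.0, delta=4):
    """Acceleration (m/s^2) of one simulated vehicle.

    v:   own speed (m/s)
    gap: bumper-to-bumper distance to the car in front (m)
    dv:  approach rate, i.e. own speed minus the leader's speed (m/s)
    All default parameter values are assumed, typical-looking numbers.
    """
    # Desired gap: standstill distance, plus a speed-dependent safety
    # distance, plus an extra margin when closing in on the leader.
    s_star = s0 + max(0.0, v * T + v * dv / (2 * math.sqrt(a_max * b)))
    # Accelerate towards the desired speed v0, brake when the gap is too small.
    return a_max * (1 - (v / v0) ** delta - (s_star / gap) ** 2)

# A car at its desired speed on an empty road neither accelerates nor brakes,
# while a car closing in quickly on a slower leader brakes.
free_road = idm_acceleration(v=33.3, gap=1e9, dv=0.0)
closing_in = idm_acceleration(v=30.0, gap=10.0, dv=10.0)
```

Applying such a rule to every vehicle in each time step, with no central coordinator, is what "building traffic flow from the bottom up" means in practice.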

It was exciting to discover that we could reproduce all of the empirically observed elementary congestion patterns, just by varying the traffic flows on a simulated freeway stretch with an entry lane. The results can be arranged in a so-called "phase diagram" (see Fig. 6.2 below). This diagram illustrates how the traffic flows on the freeway and the entry lane affect the traffic pattern, and the conditions under which a particular traffic pattern gives way to another one.

Figure 6.2: Schematic "phase diagram" illustrating the flow conditions leading to different kinds of traffic states on a freeway with a bottleneck (adapted from Ref. 2).

A capacity drop, when traffic flows best!

Can we use this understanding to reduce congestion, even though traffic often behaves in counter-intuitive ways, as demonstrated by phenomena such as "phantom traffic jams" and the puzzling "faster-is-slower effect" (see Information Box 6.1)? Yes, indeed. Our findings imply that most traffic jams are caused by a combination of three factors: a bottleneck, dense traffic and a disruption to the traffic flow. Unfortunately, the effective road capacity breaks down just when the entire capacity of the road is needed the most. The resulting traffic jam can last for hours and can increase travel times by a factor of two, five or ten. The collapse of traffic flow can even be triggered by a single truck overtaking another one!

It is perhaps even more surprising that the traffic flow becomes unstable when the maximum traffic volume on the freeway is reached – exactly when traffic is most efficient from an "economic" point of view. As a result of the breakdown, about 30 percent of the freeway capacity is lost due to unfavorable vehicle interactions. This effect is called "capacity drop". Therefore, in order to prevent a capacity drop and to avoid traffic jams, we must either keep the traffic volume sufficiently below its maximum value or stabilize high traffic flows by means of modern information and communication technologies. In fact, as I will explain in the following, "assisted self-organization" can do this by using real-time measurements and suitable adaptive feedbacks, which slightly modify the interactions between cars.

Avoiding traffic jams

Since the early days of computers, traffic engineers have always sought ways to improve the flow of traffic. The traditional "telematics" approach to reducing congestion was based on the concept of a traffic control center that collects information from a lot of traffic sensors. This control center would then centrally determine the best strategy and implement it in a top-down way, by introducing variable speed limits on motorways or using traffic lights at junctions, for example. Recently, however, researchers and engineers have started to explore a different and more efficient approach, which is based on distributed control.

In the following, I will show that local interactions may lead to a favorable kind of self-organization of a complex dynamical system, if the components of the system (in the above example, the vehicles) interact with each other in a suitable way. Moreover, I will demonstrate that only a slight modification of these interactions can turn bad outcomes (such as congestion) into good outcomes (such as free traffic flow). Therefore, in complex dynamical systems, "interaction design", also known as "mechanism design", is the secret of success.

Assisting traffic flow

Some years ago, Martin Treiber, Arne Kesting, Martin Schönhof, and I had the pleasure of being involved in the development of a new traffic assistance system together with a research team of Volkswagen. The system we invented is based on the observation that, in order to prevent (or delay) the traffic flow from breaking down and to use the full capacity of the freeway, it is important to reduce disruptions to the flow of vehicles. With this in mind, we created a special kind of adaptive cruise control (ACC) system, where adjustments are made by a certain proportion of self-driving cars that are equipped with the ACC system. A traffic control center is not needed for this. The ACC system includes a radar sensor, which measures the distance to the car in front and the relative velocity. The measurement data are then used in real time to accelerate and decelerate the ACC car automatically. Such radar-based ACC systems already existed before. In contrast to conventional ACC systems, however, the one developed by us did not merely aim to reduce the burden of driving. It also increased the stability of the traffic flow and capacity of the road. Our ACC system did this by taking into account what nearby vehicles were doing, thereby stimulating a favorable form of self-organization in the overall traffic flow. This is why we call it a "traffic assistance system" rather than a "driver assistance system".

The distributed control approach adopted by the underlying ACC system was inspired by the way fluids flow. When a garden hose is narrowed, the water simply flows faster through the bottleneck. Similarly, in order to keep the traffic flow constant, either the traffic needs to become denser or the vehicles need to drive faster, or both. The ACC system, which we developed with Volkswagen many years before people started to talk about Google cars, imitates the natural interactions and acceleration of driver-controlled vehicles most of the time. But whenever the traffic flow needs to be increased, the time gap between successive vehicles is slightly reduced. In addition, our ACC system increases the acceleration of vehicles exiting a traffic jam in order to reach a high traffic flow and stabilize it.
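A back-of-the-envelope calculation shows why a slightly smaller time gap matters. The sketch below assumes that all vehicles drive at the same speed and keep the same time gap, so that the flow is simply the speed divided by the road length each vehicle claims. The vehicle length, standstill gap, speed, and the two time gaps are assumed values chosen for illustration, not figures from the ACC project.

```python
def lane_flow_per_hour(speed_mps, time_gap_s, vehicle_length_m=5.0, min_gap_m=2.0):
    """Steady-state flow (vehicles per hour per lane) for identical vehicles."""
    # Road length claimed by one vehicle: its body, a standstill gap,
    # and the distance it travels during the time gap.
    spacing_m = vehicle_length_m + min_gap_m + speed_mps * time_gap_s
    return 3600.0 * speed_mps / spacing_m

# Same speed (25 m/s, i.e. 90 km/h), two different time gaps.
normal = lane_flow_per_hour(25.0, time_gap_s=1.5)
assisted = lane_flow_per_hour(25.0, time_gap_s=1.2)
print(f"flow gain from the shorter time gap: {100 * (assisted / normal - 1):.0f}%")
```

In this simplified picture, shaving a few tenths of a second off the time gap raises the achievable flow noticeably, which is the lever the text describes for moments when more throughput is needed.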

Creating favorable collective effects

Most other driver assistance systems today operate in a "selfish" way. They are focused on individual driver comfort rather than on creating better flow conditions for everyone. Our approach, in contrast, seeks to obtain system-wide benefits through a self-organized collective effect based on "other-regarding" local interactions. This is a central feature of what I call "Social Technologies". Interestingly, even if only a small proportion of cars (say, 20 percent) are equipped with our ACC system, this is expected to support a favorable self-organization of the traffic flow.[4] By reducing the reaction and response times, the real-time measurement of distances and relative velocities using radar sensors allows the ACC vehicles to adjust their speeds better than human drivers can. In other words, the ACC system manages to increase the traffic flow and its stability by improving the way vehicles accelerate and interact with each other.

Figure 6.3: Snapshot of a computer simulation of stop-and-go traffic on a freeway.[5]

A simulation video we created illustrates how effective this approach can be.[6] As long as the ACC system is turned off, traffic flow develops the familiar and annoying stop-and-go pattern of congestion. When seen from a bird's-eye view, it is evident that the congestion originates from small disruptions caused by vehicles joining the freeway from an entry lane. But once the ACC system is turned on, the stop-and-go pattern vanishes and the vehicles flow freely.

In summary, driver assistance systems modify the interaction of vehicles based on real-time measurements. Importantly, they can do this in such a way that they produce a coordinated, efficient and stable traffic flow in a self-organized way. Our traffic assistance system was also successfully tested in real-world traffic conditions. In fact, it was very impressive to see how naturally our ACC system already drove a decade ago. Since then, experimental cars have become smarter every year.

Cars with collective intelligence

A key issue for the operation of the ACC system is to discover where and when it needs to alter the way a vehicle is being driven. The right moments of intervention can be determined by connecting the cars in a communication network. Many cars today contain a lot of sensors that can be used to give them "collective intelligence". They can perceive the driving state of the vehicle (e.g. free or congested flow) and determine the features of the local environment to discern what nearby cars are doing. By communicating with neighboring cars through wireless car-to-car communication,[7] the vehicles can assess the situation they are in (such as the surrounding traffic state), take autonomous decisions (e.g. adjust driving parameters such as speed), and give advice to drivers (e.g. warn of a traffic jam behind the next curve). One could say that such vehicles acquire "social" abilities in that they can autonomously coordinate their movements with other vehicles.

Self-organizing traffic lights

Let's have a look at another interesting example: the coordination of traffic lights. In comparison to the flow of traffic on freeways, urban traffic poses additional challenges. Roads are connected into complex networks with many junctions, and the main problem is how to coordinate the traffic at all these intersections. When I began to study this difficult problem, my goal was to find an approach that would work not only when conditions are ideal, but also when they are complicated or problematic. Irregular road networks, accidents or building sites are examples of the types of problems that are often encountered. Given that the flow of traffic in urban areas greatly varies over the course of days and seasons, I argue that the best approach is one that flexibly adapts to the prevailing local travel demand, rather than one which is pre-planned for "typical" traffic situations at a certain time and weekday. Rather than controlling vehicle flows by switching traffic lights in a top-down way, as is done by traffic control centers today, I propose that it would be better if the actual local traffic conditions determined the traffic lights in a bottom-up way.

But how can self-organizing traffic lights, based on the principle of distributed control, perform better than the top-down control of a traffic center? Is this possible at all? Yes, indeed. Let us explore this now. Our decentralized approach to traffic light control was inspired by the discovery of oscillatory pedestrian flows. Specifically, Peter Molnar and I observed alternating pedestrian flows at bottlenecks such as doors.[8] There, the crowd surges through the constriction in one direction. After some time, however, the flow direction turns. As a consequence, pedestrians surge through the bottleneck in the opposite direction, and so on. While one might think that such oscillatory flows are caused by a pedestrian traffic light, the turning of the flow direction rather results from the build-up and relief of "pressure" in the crowd.

Could one use this pressure principle underlying such oscillatory flows to define a self-organizing traffic light control?[9] In fact, a road intersection can be understood as a bottleneck too, but one with flows in several directions. Based on this principle, could traffic flows control the traffic lights in a bottom-up way rather than letting the traffic lights control the vehicle flows in a top-down way, as we have it today? Just when I was asking myself this question, a student named Stefan Lämmer knocked at my door and wanted to write a PhD thesis.[10] This is where our investigations began.

How to outsmart centralized control

Let us first discuss how traffic lights are controlled today. Typically, there is a traffic control center that collects information about the traffic situation all over the city. Based on this information, (super)computers try to identify the optimal traffic light control, which is then implemented as if the traffic center were a "benevolent dictator". However, when trying to find a traffic light control that optimizes the vehicle flows, there are many parameters that can be varied: the order in which green lights are given to the different vehicle flows, the green time periods, and the time delays between the green lights at neighboring intersections (the so-called "phase shift"). If one were to systematically vary all these parameters for all traffic lights in the city, there would be so many parameter combinations to assess that the optimization could not be done in real time. The optimization problem is just too demanding.
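A rough count illustrates how quickly the number of parameter combinations grows. The sketch below assumes, purely for illustration, that each intersection offers a handful of switching orders, green-time settings, and phase shifts, and that all intersections can be set independently. The specific option counts are made up; only the exponential growth matters.

```python
def control_options(intersections, orders=6, green_times=10, phase_shifts=10):
    """Number of joint settings if every intersection is tuned independently."""
    per_intersection = orders * green_times * phase_shifts
    # Independent choices multiply, so the total grows exponentially
    # with the number of intersections.
    return per_intersection ** intersections

for n in (1, 5, 20):
    digits = len(str(control_options(n)))
    print(f"{n} intersections: a number with {digits} digits")
```

Even with these coarse settings, a network of only 20 intersections already yields far more combinations than could be evaluated one by one within a signal cycle, and real cities have many more intersections than that.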

Therefore, a typical approach is to operate each intersection in a periodic way and to synchronize these cycles as much as possible, in order to create a "green wave". This approach significantly constrains the search space of considered solutions, but the optimization task may still not be solvable in real time. Due to these computational constraints, traffic-light control schemes are usually optimized offline for "typical" traffic flows, and subsequently applied during the corresponding time periods (for example, on Monday mornings between 10am and 11am, or on Friday afternoons between 3pm and 4pm, or after a soccer game). In the best case, these schemes are subsequently adapted to match the actual traffic situation at any given time, by extending or shortening the green phases. But the order in which the roads at any intersection get a green light (i.e. the switching sequence) usually remains the same.

Unfortunately, the efficiency of even the most sophisticated top-down optimization schemes is limited. This is because real-world traffic conditions vary to such a large extent that the typical (i.e. average) traffic flow at a particular weekday, hour, and place is not representative of the actual traffic situation at any particular place and time. For example, if we look at the number of cars behind a red light, or the proportion of vehicles turning right, the degree to which these factors vary in space and time is approximately as large as their average value.

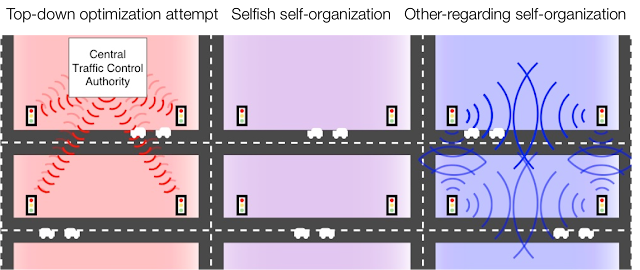

So how close to optimal is the pre-planned traffic light control scheme really? Traditional top-down optimization attempts based on a traffic control center produce an average vehicle queue, which increases almost linearly with the "capacity utilization" of the intersection, i.e. with the traffic volume. Let us compare this approach with two alternative ways of controlling traffic lights based on the concept of self-organization (see Fig. 6.4).[11] In the first approach, termed "selfish self-organization", the switching sequence of the traffic lights at each separate intersection is organized such that it strictly minimizes the travel times of the cars on the incoming road sections. In the second approach, termed "other-regarding self-organization", the local travel time minimization may be interrupted in order to clear long vehicle queues first. This may slow down some of the vehicles. But how does it affect the overall traffic flow? If there exists a faster-is-slower effect, as discussed in Information Box 6.1, could there be a "slower-is-faster effect", too?[12]

Figure 6.4: Illustration of three different kinds of traffic light control.[13]

How successful are the two self-organizing schemes compared to the centralized control approach? To evaluate this, besides locally measuring the outflows from the road sections, we assume that the inflows are measured as well (see Fig. 6.5). This flow information is exchanged between the neighboring intersections in order to make short-term predictions about the arrival times of vehicles. Based on this information, the traffic lights self-organize by adapting their operation to these predictions.
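The short-term prediction step can be sketched as follows. The example assumes that an upstream detector records the time at which each vehicle enters the road section and that vehicles travel at a known, constant speed. Both are simplifying assumptions, and the function and parameter names are hypothetical rather than taken from the study.

```python
def predicted_arrivals(entry_times_s, section_length_m, speed_mps, now_s, horizon_s):
    """Count vehicles expected to reach the stop line within the next horizon_s seconds."""
    # Time a vehicle needs to traverse the section at the assumed speed.
    travel_time_s = section_length_m / speed_mps
    arrivals = [t + travel_time_s for t in entry_times_s]
    return sum(1 for t in arrivals if now_s <= t < now_s + horizon_s)

# Four vehicles entered a 200 m section at t = 0, 5, 12 and 30 s and
# drive at 10 m/s, so they arrive at t = 20, 25, 32 and 50 s.
soon = predicted_arrivals([0, 5, 12, 30], 200.0, 10.0, now_s=20, horizon_s=15)
```

An intersection that receives such counts from its neighbors knows roughly how many vehicles to expect on each approach before they arrive, which is the information the self-organizing schemes rely on.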

Figure 6.5: Illustration of the measurement of traffic flows arriving at a road section of interest (left) and departing from it (center).[14]

When the capacity utilization of the intersection is low, both of the self-organizing traffic light schemes described above work extremely well. They produce a traffic flow which is well-coordinated and much more efficient than top-down control. This is reflected by the shorter vehicle queues at traffic lights (compare the dotted violet line and the solid blue line with the dashed red line in Fig. 6.6). However, long before the maximum capacity of the intersection is reached, the average queue length gets out of hand because some road sections with low traffic volumes are not given enough green times. That's one of the reasons why we still use traffic control centers.

Figure 6.6: Illustration of the performance of a road intersection (quantified by the overall queue length), as a function of the utilization of its capacity (i.e. traffic volume).[13]

Interestingly, by changing the way in which intersections respond to local information about arriving traffic streams, it is possible to outperform top-down optimization attempts also at high capacity utilizations (see the solid blue line in Fig. 6.6). To achieve this, the objective of minimizing the travel time at each intersection must be combined with a second rule, which stipulates that any queue of vehicles above a certain critical length must be cleared immediately.[15] The second rule avoids excessive queues which may cause spill-over effects and obstruct neighboring intersections. Thus, this form of self-organization can be viewed as "other-regarding". Nevertheless, it produces not only shorter vehicle queues than "selfish self-organization", but shorter travel times on average, too.[16]
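The combination of the two rules can be illustrated with a deliberately simplified decision function. This is a sketch under stated assumptions, not the published control algorithm: travel-time minimization is replaced by a greedy proxy (serve the approach where a green light helps the most vehicles), and the critical queue length of 12 vehicles is an arbitrary illustrative value.

```python
def choose_green(queues, expected_arrivals, critical_queue=12):
    """Return the approach that should get the green light next.

    queues:            vehicles currently waiting, per approach
    expected_arrivals: vehicles predicted to arrive soon, per approach
    """
    # Second rule (other-regarding): any queue above the critical length
    # is cleared first, to avoid spill-over into neighboring intersections.
    too_long = {a: q for a, q in queues.items() if q >= critical_queue}
    if too_long:
        return max(too_long, key=too_long.get)
    # First rule (greedy stand-in for travel-time minimization): serve the
    # approach with the most waiting plus soon-to-arrive vehicles.
    return max(queues, key=lambda a: queues[a] + expected_arrivals.get(a, 0))

# Normally the busier approach wins, but a critical queue takes priority.
usual = choose_green({"north": 3, "east": 5}, {"north": 6, "east": 1})
urgent = choose_green({"north": 3, "east": 13}, {"north": 20, "east": 0})
```

The point of the sketch is the priority order: the local optimization runs most of the time, and the queue-clearing rule interrupts it only when a queue threatens to obstruct a neighbor.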

The above graph shows a further noteworthy effect: the combination of two bad strategies can be the best one! In fact, clearing the longest queue (see the grey dash-dotted line in Fig. 6.6) always performs worse than top-down optimization (dashed red line). When the capacity utilization of the intersection is high, strict travel time minimization also produces longer queues (see the dotted violet line). Therefore, if the two strategies (clearing long queues and minimizing travel times) are applied in isolation, they do not perform well at all. However, contrary to what one might expect, the combination of these two under-performing strategies, as applied in the other-regarding kind of self-organization, produces the best results (see the solid blue curve).

This is because the other-regarding self-organization of traffic lights flexibly takes advantage of gaps that randomly appear in the traffic flow to ease congestion elsewhere. In this way, non-periodic sequences of green lights may result, which outperform the conventional periodic service of traffic lights. Furthermore, the other-regarding self-organization creates a flow-based coordination of traffic lights among neighboring intersections. This coordination spreads over large parts of the city in a self-organized way through a favorable cascade effect.

A pilot study

After our promising simulation study, Stefan Lämmer approached the public transport authority in Dresden, Germany, to collaborate with them on traffic light control. Until then, the traffic center had applied a state-of-the-art adaptive control scheme producing "green waves". But although it was the best system on the market, they weren't entirely happy with it. Around a busy railway station in the city center they could either produce "green waves" of motorized traffic on the main arterials or prioritize public transport, but not both. The particular challenge was to prioritize public transport while so many different tram tracks and bus lanes cut through Dresden's highly irregular road network. However, if buses and trams were given a green light whenever they approached an intersection, this would destroy the green wave system needed to keep the motorized traffic flowing. Inevitably, the resulting congestion would spread quickly, causing massive disruption over a huge area of the city.

When we simulated the expected outcomes of the other-regarding self-organization of traffic lights and compared them with the state-of-the-art control they used, we got amazing results.[17] The waiting times were reduced for all modes of transport, dramatically for public transport and pedestrians, but also somewhat for motorized traffic. Overall, the roads were less congested, trams and buses could be prioritized, and travel times became more predictable, too. In other words, the new approach can benefit everybody (see figure below) – including the environment. It is therefore only logical that the other-regarding self-organization approach was recently implemented at some traffic intersections in Dresden with amazing success (a 40 percent reduction in travel times). "Finally, a dream is becoming true", said one of the observing traffic engineers, and a bus driver inquired in the traffic center: "Where have all the traffic jams gone?"[18]

Figure 6.7: Improvement of intersection performance for different modes of transport achieved by other-regarding self-organization. The graph displays cumulative waiting times. Public transport has to wait 56 percent less, motorized traffic 9 percent less, and pedestrians 36 percent less.[19]

Lessons learned

The example of self-organized traffic control allows us to draw some interesting conclusions. Firstly, in a complex dynamical system, which varies a lot in a hardly predictable way and can't be optimized in real time, the principle of bottom-up self-organization can outperform centralized top-down control. This is true even if the central authority has comprehensive and reliable data. Secondly, if a selfish local optimization is applied, the system may perform well in certain circumstances. However, if the interactions between the system's components are strong (if the traffic volume is too high), local optimization may not lead to large-scale coordination (here: of neighboring intersections). Thirdly, an "other-regarding" distributed control approach, which adapts to local needs and additionally takes into account external effects ("externalities"), can coordinate the behavior of neighboring components within the system such that it produces favorable and efficient outcomes.

In conclusion, a centralized authority may not be able to manage a complex dynamical system well because even supercomputers may not have enough processing power to identify the most appropriate course of action in real time. Selfish local optimization, in turn, will fail due to a breakdown of coordination when interactions in the system become too strong. However, an other-regarding local self-organization approach can overcome both of these problems by considering externalities (such as spillover effects). This results in a system that is both efficient and resilient to unforeseen circumstances.

Interestingly, there has recently been a trend in many cities towards replacing signal-controlled intersections with roundabouts. There is also a trend towards forgoing complex traffic signs and regulation in favor of simpler designs ("shared spaces"), which encourage people to voluntarily act in a considerate way towards fellow road users and pedestrians. In short, the concept of self-organization is spreading.

As we will see in later chapters of this book, many of the conclusions drawn above are relevant to socio-economic systems too, because they are usually also characterized by competitive interests or processes which can't be simultaneously served. In fact, coordination problems occur in many man-made systems.

Industry 4.0: Towards smart, self-organizing production

About ten years ago, together with Thomas Seidel and others, we began to investigate how production plants could be designed to operate more efficiently.[20] We studied a packaging production plant in which bottlenecks occurred from time to time. When this happened, a jam of products waiting to be processed created a backlog and a growing shortfall in the number of finished products (see Fig. 6.7). We noticed that there are quite a few similarities with traffic systems.[21] For example, the storage buffers on which partially finished products accumulate are similar to the road sections on which vehicles accumulate. Moreover, the units which process products are akin to road junctions, and the different manufacturing lines are similar to roads. From this perspective, production schedules have a similar function to traffic light schedules, and the time it takes to complete a production cycle is analogous to travel and delay times. Thus, when the storage buffers are full, it is as if they suffer from congestion, and breakdowns in the machinery are like accidents. But modeling production is even more complicated than modeling traffic, as materials are transformed into other materials during the production process.

Figure 6.7: Schematic illustration of bottlenecks and jams forming in a production plant.[22]
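The analogy between storage buffers and congested road sections can be illustrated with a toy model. The following is only a minimal sketch under simplifying assumptions (a single line with two machines, fixed processing rates, discrete time steps); the function name and parameters are hypothetical and not taken from the study.

```python
def simulate_line(arrivals, upstream_rate, bottleneck_rate):
    """Toy two-stage production line with a storage buffer in between.

    arrivals        -- raw items arriving in each time step
    upstream_rate   -- max items the first machine processes per step
    bottleneck_rate -- max items the second machine processes per step
    Returns (buffer level after each step, total finished items).
    """
    raw = 0            # items waiting in front of the first machine
    buffer_level = 0   # partially finished items between the machines
    finished = 0
    history = []
    for arriving in arrivals:
        raw += arriving
        moved = min(raw, upstream_rate)          # first machine feeds the buffer
        raw -= moved
        buffer_level += moved
        done = min(buffer_level, bottleneck_rate)  # second machine drains it
        buffer_level -= done
        finished += done
        history.append(buffer_level)
    return history, finished


# A slower second machine acts like a congested junction:
print(simulate_line([3] * 5, upstream_rate=3, bottleneck_rate=2))
# ([1, 2, 3, 4, 5], 10)
print(simulate_line([3] * 5, upstream_rate=3, bottleneck_rate=3))
# ([0, 0, 0, 0, 0], 15)
```

In the first run the buffer fills up step by step, like a queue of vehicles in front of a junction, and the number of finished products falls short by five items.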

Drawing on our experience with traffic, we devised an agent-based model for these production flows. Again, we focused on how local interactions can govern and potentially assist the flow of materials. We thought about equipping all of the machines and products with a small "RFID" computer chip. These chips have internal memory and the ability to communicate wirelessly over short distances. RFID technology is already widely used in other contexts, such as the tagging of consumer goods. But it can also enable a product to communicate with other products and with machines in the vicinity (see Fig. 6.8). For example, a product could indicate that it had been delayed and thus needed to be prioritized, requiring a kind of overtaking maneuver. Products could further select between alternative routes, and tell the machines what needed to be done with them. They could also cluster together with similar products to ensure efficient processing. As the Internet of Things spreads, such self-organization approaches can now be easily implemented by using measurement sensors that communicate wirelessly. Such automated production processes are known under the label "Industry 4.0".

Figure 6.8: Communication of autonomous units with other units, conveyor belts and machines in self-organizing production ("Industry 4.0").[23]
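The "overtaking maneuver" of delayed products can be pictured as a priority queue in front of a machine. The sketch below is purely illustrative: the class, its methods and the idea of reporting a single delay value are assumptions made for this example, not the interface of the agent-based model itself.

```python
import heapq


class Machine:
    """Hypothetical machine that serves the most delayed product first."""

    def __init__(self):
        self._queue = []
        self._counter = 0  # tie-breaker: equal delays keep arrival order

    def announce(self, product_id, delay):
        # heapq is a min-heap, so the delay is negated to pop the
        # largest delay first.
        heapq.heappush(self._queue, (-delay, self._counter, product_id))
        self._counter += 1

    def next_product(self):
        if not self._queue:
            return None
        _, _, product_id = heapq.heappop(self._queue)
        return product_id


machine = Machine()
machine.announce("A", delay=0)
machine.announce("B", delay=5)   # B reports that it is running late
machine.announce("C", delay=0)
print([machine.next_product() for _ in range(3)])  # ['B', 'A', 'C']
```

The delayed product B overtakes A and C, while products with equal delay are still processed in their order of arrival.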

But we can go one step further. In the past, designing a good factory layout was a complicated, time-consuming and expensive process, which was typically performed in a top-down way. Compared to this, self-organization based on local interactions between a system's components (here: products and machines) is again a superior approach. The distributed control approach used in the agent-based computer simulation discussed above has a phenomenal advantage: it makes it easy to test different factory layouts without having to specify all details of the manufacturing plant. All elements of a factory, such as machines and transportation units, can be easily combined to create various variants of the entire production process. These elements could be just "clicked together" (or even combined by an "evolutionary algorithm"). After this, the possible interactions between the elements of a production plant could be automatically specified by the simulation software. Therefore, the machines immediately know what to do, because the necessary instructions are transmitted by the products.[24] In such a way, it becomes easy to test many different factory layouts and to explore which solution is most efficient and most resilient to disruption.

In the future, this concept could be even further extended. If we consider that recessions are like traffic jams in the world economy, and that capital or product flows are sometimes obstructed or delayed, could we use real-time information about the world's supply networks to minimize economic disruptions? I think this is actually possible. If we had access to data detailing supply chains worldwide, we could try to build an assistance system to reduce both overproduction and underproduction, and with this, economic recessions too.

Making the "invisible hand" work

As we have seen above, the self-organization of traffic flow and production can be a very successful approach if a number of conditions are fulfilled. Firstly, the system's individual components need to be provided with suitable real-time information. Secondly, there needs to be prompt feedback, which means that the information should elicit a timely and suitable response. Thirdly, the incoming information must determine how the interaction should be modified.[25]

Considering this, would a self-organizing society be possible? For hundreds of years people have been fascinated by the self-organization of social insects such as ants, bees and termites. A bee hive, for example, is an astonishingly differentiated, complex and well-coordinated social system, even though there is no hierarchical chain of command. No bee orchestrates the actions of the other bees. The queen bee simply lays eggs and all other bees perform their respective roles without being told to do so.

Inspired by this, Bernard Mandeville’s The Fable of the Bees (1714) argues that actions driven by selfish motivations can create collective benefits. Adam Smith's concept of the "invisible hand" conveys a similar idea. According to this, the actions of people would be invisibly coordinated in a way that automatically improves the state of the economy and of society. Behind this worldview there seems to be a belief in some sort of divine order.[26]

However, in the wake of the recent financial and economic crisis, the assumption that complex systems will always automatically produce the best possible outcomes has been seriously questioned. Phenomena such as traffic jams and crowd disasters also demonstrate the dangers of having too much faith in the "invisible hand". The same applies to "tragedies of the commons" such as the overuse of resources, discussed in the next chapter.

Yet, I believe that, three hundred years after the principle of the invisible hand was postulated, we can finally make it work. Whether self-organization in a complex dynamical system ends in success or failure largely depends on the interaction rules between a system's components. So, we need to make sure that the system components suitably respond to real-time information in such a way that they produce a favorable pattern, property, or functionality by means of self-organization. The Internet of Things is the enabling technology that can now provide us with the necessary real-time data, and complexity science can teach us how to use this data.

Information technologies to assist social systems

I have shown above that self-organizing traffic lights can outperform the top-down control of traffic flows, if suitable real-time feedbacks are applied. On freeways, traffic congestion can be reduced by adaptive cruise control systems, which locally modify the interactions between cars. In other words, skillful "mechanism design" can support favorable self-organization.

But these are technological systems. Could we also build tools to assist social systems? Yes, we can! Sometimes, the design of social mechanisms is challenging, but sometimes it is easy. Imagine trying to share a cake fairly. If social norms allow the person who cuts the cake to take the first piece, this will often be bigger than the others. If he or she should take the last piece, however, the cake will probably be distributed in a much fairer way. Therefore, alternative sets of rules that are intended to serve the same goal (such as cutting a cake) may result in completely different outcomes.
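The cake example can be turned into a small numerical sketch. It assumes only two people, a purely selfish cutter, and a second person who always takes the larger of the pieces left to choose from; the function names and the list of candidate cuts are hypothetical.

```python
def cutter_share(cut, cutter_picks_first):
    """Share of the cake the cutter ends up with.

    cut -- fraction of the cake in the first piece (the rest is 1 - cut)
    """
    pieces = (cut, 1.0 - cut)
    # Whoever picks first takes the larger piece.
    return max(pieces) if cutter_picks_first else min(pieces)


def best_cut(cutter_picks_first, candidates):
    """Cut that maximizes the selfish cutter's own share."""
    return max(candidates, key=lambda c: cutter_share(c, cutter_picks_first))


candidates = [0.5, 0.6, 0.7, 0.8, 0.9]
print(best_cut(True, candidates))   # 0.9
print(best_cut(False, candidates))  # 0.5
```

With the same selfish motivation, the rule "the cutter picks last" makes the equal split the cutter's best choice, whereas "the cutter picks first" rewards the most unequal cut.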

As Information Box 6.2 illustrates, it is not always easy to be fair. Details of the interactions matter a lot. But with the right set of interaction rules, we can, in fact, create a better world. In the following, we will discuss how the social mechanisms embedded in our culture can make an important difference and how one can support cooperation and social order in situations where an unfavorable outcome would otherwise result.

INFORMATION BOX 6.1: Faster-is-slower effect

Figure 6.9: Illustration of the faster-is-slower effect on a freeway with a bottleneck, which is created by entering vehicles. The "boomerang effect" implies that a small initial vehicle platoon grows until it changes its propagation direction and returns to the location of the entry lane, causing the free traffic flow on the freeway to break down and to produce an oscillatory form of congestion.[27]

One interesting traffic scenario to study is a multi-lane freeway with a bottleneck created by an entry lane through which additional vehicles join the freeway. The following observations are made: When the density of vehicles is low, even large disruptions (such as large groups of cars joining the freeway) do not have lasting effects on the resulting traffic flow. In sharp contrast, when the density is above 30 vehicles per kilometer and lane or so, even the slightest variation in the speed of a vehicle can cause free traffic flow to break down, resulting in a "phantom traffic jam" as discussed before. Furthermore, when the density of vehicles is within the so-called "bistable" or "metastable" density range just before traffic becomes unconditionally unstable, small disruptions have no lasting effect on traffic flow, but disruptions larger than a certain critical size (termed the "critical amplitude") cause a traffic jam. Therefore, this density range may produce different kinds of traffic patterns, depending on the respective "history" of the system.

This can lead to a counter-intuitive behavior of traffic flow (see picture above). Imagine the traffic flow on a freeway stretch with an entry lane is smooth and "metastable", i.e. insensitive to small disruptions, while it is sensitive to big ones. Now suppose that the density of vehicles entering this stretch of freeway is significantly reduced for a short time. One would expect that traffic will flow even better, but it doesn't. Instead, vehicles accelerate into the area of lower density and this can trigger a traffic jam! This breakdown of the traffic flow, which is caused by faster driving, is known as the "faster-is-slower effect".

How does this effect come about? Firstly, the local disruption in the vehicle density changes its shape while the vehicles travel along the freeway. This produces a vehicle platoon (i.e. a cluster of vehicles associated with a locally increased traffic density). This platoon moves forward and grows over time, but it eventually passes the location of the entry lane. Therefore, it seems obvious that it would finally leave the freeway stretch under consideration. However, at a certain point in time, the cluster of vehicles grows so big that it suddenly changes its propagation direction, meaning that it moves backwards rather than forwards. This is called the "boomerang effect". The effect occurs because the vehicles in the cluster are temporarily stopped when the cluster grows so big that it becomes a traffic jam. At the front of the cluster, vehicles move out of the traffic jam, while new vehicles join the traffic jam at the end. In combination, this makes the traffic jam move backwards until it eventually reaches the location of the entry lane (at x=0 km). When this happens, the cars joining the freeway from the entry lane are disrupted to such an extent that the upstream traffic flow breaks down. This causes a long queue of vehicles, which continues to grow and produces a stop-and-go pattern. Even though the road could theoretically handle the overall traffic flow, the cars interact in a way that causes a reduction in the capacity of the freeway. The effective capacity is then given by the number of cars exiting the traffic jam, which is about 30 percent below the maximum traffic flow that the freeway can support!
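The backward motion of a jam follows from the conservation of vehicles: the boundary between two traffic states moves at a speed given by the difference of the flows divided by the difference of the densities. The sketch below applies this standard kinematic-wave relation. The function names and the numbers used (a jam density of 140 vehicles per kilometer and lane, an inflow of 1800 vehicles per hour at a density of 20 vehicles per kilometer) are illustrative assumptions; only the capacity drop of about 30 percent is taken from the text.

```python
def front_speed(rho_up, flow_up, rho_down, flow_down):
    """Speed (km/h) of the boundary between an upstream and a downstream
    traffic state, derived from vehicle conservation.

    Densities in vehicles per km and lane, flows in vehicles per hour
    and lane. A negative value means that the boundary moves against
    the driving direction.
    """
    return (flow_down - flow_up) / (rho_down - rho_up)


def effective_capacity(max_flow, capacity_drop=0.30):
    """Outflow from a jam, assumed to lie a fixed fraction below max_flow."""
    return max_flow * (1.0 - capacity_drop)


# Tail of a jam: free traffic upstream, standing vehicles downstream.
tail = front_speed(rho_up=20.0, flow_up=1800.0, rho_down=140.0, flow_down=0.0)
print(tail)  # -15.0
```

The negative sign expresses what is described above: vehicles joining at the end make the jam grow against the direction of travel, even though every single car is driving forwards.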

INFORMATION BOX 6.2: The challenge of fairness

If there is a shortage of basic resources such as food, water or energy, distributional fairness becomes particularly important. Otherwise, violent conflict over scarce resources might break out. But it is not always easy to be fair. Together with Rui Carvalho, Lubos Buzna and three others,[28] I therefore investigated the problem of distributional fairness for the case of natural gas, which is transported through pipelines. The proportion of a pipeline that is used to serve different destinations can be visualized in a pie chart (i.e. similar to cutting a cake). But in the case of natural gas supply for various cities and countries, the problem of distributional fairness requires one to cut several cakes at the same time. Given the multiple constraints set by the capacities of the pipelines, it is usually impossible to achieve perfect fairness everywhere. Therefore, it is often necessary to make a compromise, and it is actually a difficult mathematical challenge to find a good one.

Surprisingly, the constraints implied by the pipeline capacities can be particularly problematic if less gas is transported overall, as might happen when one of the source regions does not deliver for geopolitical or other reasons. Then it is necessary to redistribute gas from other source regions, in order to maintain gas deliveries for everyone. Despite the smaller volumes of gas delivery, this will often lead to pipeline congestion problems, as the pipeline network was not designed to be used in this way. However, our study showed that it is still possible to achieve a resilient gas supply, if a fairness-oriented algorithm inspired by the Internet routing protocol is applied.
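To give a flavor of what a fairness-oriented allocation can look like, the following sketch splits the capacity of a single pipeline among several destinations by max-min fairness ("progressive filling"), a notion of fairness known from computer networks. This is a simpler stand-in chosen for illustration, not the algorithm of the study, which has to treat many coupled pipelines at once; the function name and the example demands are hypothetical.

```python
def max_min_fair(demands, capacity):
    """Max-min fair split of one pipeline's capacity among destinations.

    demands  -- dict mapping destination name to requested volume
    capacity -- total volume the pipeline can carry
    """
    allocation = {name: 0.0 for name in demands}
    unsatisfied = dict(demands)
    remaining = float(capacity)
    while unsatisfied and remaining > 1e-12:
        share = remaining / len(unsatisfied)
        # Destinations whose demand fits within an equal share are served fully.
        satisfied = {n: d for n, d in unsatisfied.items() if d <= share}
        if not satisfied:
            # Nobody can be fully served: split what is left equally.
            for name in unsatisfied:
                allocation[name] += share
            break
        for name, demand in satisfied.items():
            allocation[name] += demand
            remaining -= demand
            del unsatisfied[name]
    return allocation


print(max_min_fair({"A": 2, "B": 4, "C": 10}, capacity=12))
# {'A': 2.0, 'B': 4.0, 'C': 6.0}
```

Small demands are met in full, and the shortage is carried by the largest consumer. With several pipelines and several "cakes" to be cut at once, such simple rules are no longer sufficient, which is why finding a good compromise becomes a mathematical challenge.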

[1] The diversity of approaches and the plurality of goal functions is an essential aspect for complex economies and societies to thrive. This and the conscious decision what to engage in and with whom distinguishes assisted self-organization from the top-down nudging approach discussed before.

[2] Reprinted from D. Helbing et al. (2009) Theoretical vs. empirical classification and prediction of congested traffic states. Eur. Phys. J. B 69, 583-598, with kind permission of Springer publishers

[3] D. Helbing, An Analytical Theory of Traffic Flow, a selection of articles reprinted from European Journal of Physics B, see http://www.researchgate.net/publication/277297387_An_Analytical_Theory_of_Traffic_Flow; see also D. Helbing (2001) Traffic and related self-driven many-particle systems. Reviews of Modern Physics 73, 1067-1141.

[4] A. Kesting, M. Treiber, M. Schönhof, and D. Helbing (2008) Adaptive cruise control design for active congestion avoidance. Transportation Research C 16(6), 668-683 ; A. Kesting, M. Treiber, and D. Helbing (2010) Enhanced intelligent driver model to access the impact of driving strategies on traffic capacity. Phil. Trans. R. Soc. A 368(1928), 4585-4605

[5] I would like to thank Martin Treiber for providing this graphic.

[7] A. Kesting, M. Treiber, and D. Helbing (2010) Connectivity statistics of store-and-forward intervehicle communication. IEEE Transactions on Intelligent Transportation Systems 11(1), 172-181.

[8] D. Helbing and P. Molnár (1995) Social force model for pedestrian dynamics. Physical Review E 51, 4282-4286

[9] D. Helbing, S. Lämmer, and J.-P. Lebacque (2005) Self-organized control of irregular or perturbed network traffic. Pages 239-274 in: C. Deissenberg and R. F. Hartl (eds.) Optimal Control and Dynamic Games (Springer, Dordrecht)

[10] S. Lämmer (2007) Reglerentwurf zur dezentralen Online-Steuerung von Lichtsignalanlagen in Straßennetzwerken (PhD thesis, TU Dresden)

[11] S. Lämmer and D. Helbing (2008) Self-control of traffic lights and vehicle flows in urban road networks. Journal of Statistical Mechanics: Theory and Experiment, P04019, see http://iopscience.iop.org/1742-5468/2008/04/P04019; S. Lämmer, R. Donner, and D. Helbing (2007) Anticipative control of switched queueing systems, The European Physical Journal B 63(3) 341-347; D. Helbing, J. Siegmeier, and S. Lämmer (2007) Self-organized network flows. Networks and Heterogeneous Media 2(2), 193-210; D. Helbing and S. Lämmer (2006) Method for coordination of concurrent processes for control of the transport of mobile units within a network, Patent WO/2006/122528

[12] C. Gershenson and D. Helbing, When slower is faster, see http://arxiv.org/abs/1506.06796; D. Helbing and A. Mazloumian (2009) Operation regimes and slower-is-faster effect in the control of traffic intersections. European Physical Journal B 70(2), 257–274

[13] Reproduced from D. Helbing (2013) Economics 2.0: The natural step towards a self-regulating, participatory market society. Evol. Inst. Econ. Rev. 10, 3-41, with kind permission of Springer Publishers

[14] Reproduction from S. Lämmer (2007) Reglerentwurf zur dezentralen Online-Steuerung von Lichtsignalanlagen in Straßennetzwerken (Dissertation, TU Dresden) with kind permission of Stefan Lämmer, accessible at

[15] This critical length can be expressed as a certain percentage of the road section.

[16] Due to spill-over effects and a lack of coordination between neighboring intersections, selfish self-organization may cause a quick spreading of congestion over large parts of the city analogous to a cascading failure. This outcome can be viewed as a traffic-related "tragedy of the commons", as the overall capacity of the intersections is not used in an efficient way.

[17] S. Lämmer and D. Helbing (2010) Self-stabilizing decentralized signal control of realistic, saturated network traffic, Santa Fe Working Paper No. 10-09-019, see http://www.santafe.edu/media/workingpapers/10-09-019.pdf ; S. Lämmer, J. Krimmling, A. Hoppe (2009) Selbst-Steuerung von Lichtsignalanlagen - Regelungstechnischer Ansatz und Simulation. Straßenverkehrstechnik 11, 714-721

[18] Latest results from a real-life test can be found here: S. Lämmer (2015) Die Selbst-Steuerung im Praxistest, see http://stefanlaemmer.de/Publikationen/Laemmer2015.pdf

[19] Adapted reproduction from S. Lämmer and D. Helbing (2010) Self-stabilizing decentralized signal control of realistic, saturated network traffic, Santa Fe Working Paper No. 10-09-019, see

[20] T. Seidel, J. Hartwig, R. L. Sanders, and D. Helbing (2008) An agent-based approach to self-organized production. Pages 219–252 in: C. Blum and D. Merkle (eds.) Swarm Intelligence Introduction and Applications (Springer, Berlin), see http://arxiv.org/abs/1012.4645

[21] D. Helbing (1998) Similarities between granular and traffic flow. Pages 547-552 in: H. J. Herrmann, J.-P. Hovi, and S. Luding (eds.) Physics of Dry Granular Media (Springer, Berlin); K. Peters, T. Seidel, S. Lämmer, and D. Helbing (2008) Logistics networks: Coping with nonlinearity and complexity. Pages 119–136 in: D. Helbing (ed.) Managing Complexity: Insights, Concepts, Applications (Springer, Berlin); D. Helbing, T. Seidel, S. Lämmer, and K. Peters (2006) Self-organization principles in supply networks and production systems. Pages 535–558 in: B. K. Chakrabarti, A. Chakraborti, and A. Chatterjee (eds.) Econophysics and Sociophysics - Trends and Perspectives (Wiley, Weinheim); D. Helbing and S. Lämmer (2005) Supply and production networks: From the bullwhip effect to business cycles. Page 33–66 in: D. Armbruster, A. S. Mikhailov, and K. Kaneko (eds.) Networks of Interacting Machines: Production Organization in Complex Industrial Systems and Biological Cells (World Scientific, Singapore)

[22] Reproduction from T. Seidel et al. (2008) An agent-based approach to self-organized production, in: C. Blum and D. Merkle (eds.) Swarm Intelligence (Springer, Berlin), with kind permission of the publisher

[23] I would like to thank Thomas Seidel for providing this graphic.

[24] In this way, the local interactions and exchange of information between agents (here, the products and machines) again creates something like collective intelligence.

[25] In later chapters, I will discuss in detail how to gather such information and how to find suitable interaction rules.

[26] This idea is still present in today's neo-liberalism. In his first inaugural address, Ronald Reagan, for example, said: "In this present crisis, government is not the solution to our problem; government is the problem. From time to time we've been tempted to believe that society has become too complex to be managed..."

[27] Reproduction from D. Helbing, I. Farkas, D. Fasold, M. Treiber, and T. Vicsek (2002) Critical discussion of "synchronized flow", simulation of pedestrian evacuation, and optimization of production processes, in M. Fukui, Y. Sugiyama, M. Schreckenberg, and D.E. Wolf (eds.) Traffic and Granular Flow '01 (Springer, Berlin), pp. 511-530, with kind permission of the publishers

[28] R. Carvalho, L. Buzna, F. Bono, M. Masera, D. K. Arrowsmith, and D. Helbing (2014) Resilience of natural gas networks during conflicts, crises and disruptions. PLOS ONE, 9(3), e90265
