Showing posts with label Internet of Things. Show all posts

Sunday, 7 April 2024

SOCIETY 5.0 AND THE INTERNET OF BODIES: WHAT LIES AHEAD?

When information technology merges with bio-, gene-, neuro- and nanotechnology, at some point nothing will be as it once was. This holds huge opportunities and even greater risks, including the possibility of changing humanity and society forever. This needs to be considered carefully!

For some years now, it has been proclaimed that we are on the way to Society 5.0.[1] After the hunter-gatherer society (Society 1.0), we have left behind the agrarian society (Society 2.0) and the industrial society (Society 3.0). Currently, we are supposedly still in the information society (Society 4.0). Yet although the digital transformation is demanding everything of us, the next revolution is already upon us! A change as huge as the three before it? What is this all about?

Society 5.0 is said to be the outcome of the Fourth Industrial Revolution,[2] which is characterized by Artificial Intelligence, the Internet of Things, blockchain technology and advanced robotics. OK, we can perhaps still accept that. But then there is also talk of neurotechnology and gene editing,[3] possibly not only in plants.

To understand the full implications, one has to go back a little further, because what is emerging is truly revolutionary. Indeed, it seems almost like science fiction. Nevertheless, it is already much more real than most of us suspect. And it carries plenty of explosive potential for democracy and fundamental rights, as well as considerable potential for military use and misuse, also known as dual use.[4]

In the following, I would therefore like to sketch the revolutionary societal potentials and risks of the so-called converging technologies. This concerns the combination of information technologies with bio-, gene-, nano- and neurotechnologies, as well as the “Internet of Bodies” and the “Internet of Everything”. What is meant by this?

Let us begin with the “Internet of Things”. This is about the possibility of connecting all kinds of sensors for all kinds of environmental factors to the Internet (from noise, temperature and air pollution to CO2, light intensity and radioactivity). By now, there are even more of these sensors than people on our planet. With technological progress, they are becoming ever cheaper and ever smaller, until some of them reach the size of nanoparticles. These are particles of up to 100 nanometers in diameter, i.e. no larger than one ten-thousandth of a millimeter, comparable to the size of a virus and invisible to the human eye.

This means they also fit into our cells! Embedded in our bodies, they could thus be used to read out data that are relevant, among other things, to our personal health. Indeed, they potentially play a role in “personalised health” or “high precision medicine”, i.e. the intended personalization of medical treatments. These would then be much more effective, it is hoped, and have fewer side effects. Moreover, many diseases could be detected and treated at an early stage, before they have broken out and caused serious damage. Some are already dreaming of eternal life, or at least of a considerable extension of life.

What sounds so dreamlike is based on the so-called “Internet of Bodies”. “The Internet of Bodies is here”, the World Economic Forum announced in 2020.[5] And this could fundamentally change our lives. In scientific circles, one rather speaks of the “Internet of (Bio-)Nano-Things”. And although there are by now nanorobots and computer chips the size of a grain of sand, the partly widespread fear that we would now soon be chipped is misleading. Most nanoparticles are passive and very simple; they do not send data by themselves. One can rather think of them as a contrast agent, with the help of which body structures and processes can be read out through a process known in physics as scattering. In part, 5G radiation is suitable for this, as is 6G,[6] the next generation of mobile communications, whereby the smartphone can be used as a relay station for collecting and passing on the data. The use of suitable light radiation is also conceivable. In principle, this could also be used to create an electronic identity (“eID”), in which our body is, so to speak, the password.

Now it gets exciting: it will be possible not only to read out body data, but also to influence bodily processes. For example, certain genetic processes can be switched on or off.[7] “Optogenetics explores ways of stimulating genetically modified nerve cells via light pulses instead of electricity”, as could be read as early as 2015 in an ACATECH assessment titled „Innovationspotenziale der Mensch-Maschine-Interaktion“ (“Innovation Potentials of Human-Machine Interaction”).[8]

Let us now try to imagine what else could become possible by combining nano-, bio- and gene technology with quantum technology and Artificial Intelligence. For example, “cognitive enhancement” has been a declared goal for more than 10 years.[9] A scientific article on “Nanotools for Neuroscience and Brain Activity Mapping” stated as early as 2013:[10] “Nanoscience and nanotechnology are poised to provide a rich toolkit of novel methods to explore brain function by enabling simultaneous measurement and manipulation of activity of thousands or even millions of neurons. We and others refer to this goal as the Brain Activity Mapping Project.”

In the same year, it was said that the indexing of the human brain would lead to the amount of data soon doubling every 12 hours,[11] according to a leading technology company. That was shortly before Edward Snowden drew our attention to the problems of blanket Internet surveillance. As a result, topics such as reading out thoughts, technologically enabled thought transmission (“technological telepathy”) or thought control were far from our minds for the time being, although research on them was already under way. And so we remained stuck in the notion that “those who have nothing to hide have nothing to fear”, while in reality the whole world was already beginning to be turned upside down.

Where exactly the development once initiated by the US Defense Advanced Research Projects Agency (DARPA) stands today is difficult to say on the basis of public information. However, there are indications that future smartphones could become relay stations between our mind and body on the one hand and the satellite-based Internet on the other. Smartphones are meant to be controllable by thoughts one day, and probably the other way round as well. In this way, what is called a “hive mind” might one day become possible. Our bodies, our lives, our thoughts would be read out, and the knowledge collected in this way could be made accessible worldwide, to the extent that this is desired. It would be, so to speak, an upscaled mammoth version of ChatGPT, trained not only on all texts, but on our thoughts.

Many applications of converging technologies are conceivable: from new solutions for a sustainable future to the promotion of “population health” or even planetary health; from overcoming psychological trauma and neurodegenerative diseases to birth and population control. Militarily, targeted, personalized killing would become possible, anywhere on the planet and virtually below the detection limit. Given the tense world situation, which is now often called an emergency, some may consider such applications justified or necessary. I do not share this view. But who is to judge applications of converging technologies, given also that many of them can work below the threshold of perception?

Please do not get me wrong: I am not claiming here that such technologies have already been misused. But what have we learned since the Nuremberg Code? I think we had better pay very close attention to how technologies are used that can intervene so deeply in human life. This needs to be stressed, because as early as 2021, a defence ministry study on “Human Augmentation” pointed out that the military will not wait until all ethical questions have been settled. Furthermore, according to the study, the technical “upgrading” of humans has in principle already begun, and measures that could be called gene therapies are already in the pipeline.[12]

The lack of transparency and the widespread ignorance about these “future technologies” must therefore be overcome urgently. For one thing is certain: converging technologies can not only be a blessing; they also make us maximally vulnerable.

Thus, many questions arise: Can we trust that a mass-surveillance-based, data-driven, AI-controlled, “god-like” planetary control system, and those who use it, would really act benevolently? How would one even measure or define that? What would be worse: a powerful system that could be misused, or one that does not work properly?

How are we to keep control over our lives if the data that can increasingly be used to steer our thinking, feeling and behaviour are not under our control? How do we ensure that a system that is perhaps intended to help cope temporarily with a critical world situation does not eliminate the special human capacity for free thought forever? How do we avoid the emergence of a technocracy and a new system of rule with which we may not agree and which would presumably be too powerful for us ever to change it again ourselves? How do we ensure political control if such a system could bypass parliament and the rule of law, and could indeed establish a kind of parallel, algorithm-based operating system for our society? Question upon question, all requiring our urgent attention.

In any case, the ethical problems connected with such technologies have so far been discussed far too little. The application of gene editing, AI and nanotechnology in medicine does offer immense advantages for personalized medicine, but it also raises fundamental questions of data protection and personality rights. Responsibility for the use of these technologies (i.e. “accountability”) must be clarified. The protection of our fundamental rights, including informational self-determination, must be ensured as soon as possible, because in essence almost all of our fundamental rights could be undermined.

In addition, it is essential to ensure that our society can decide freely, and without manipulation, on the use and limits of these technologies. In doing so, we must consider, among other things, the effects on the world of work, the protection of the private and intimate sphere, and the autonomy of the individual, as well as the handling of a person's individual creative life achievements and personal identity. (For example, the right to one's own image should be applied analogously to so-called “digital twins”.)

International guidelines and transparent governance structures could help to use the potential of the technologies without endangering the freedom and rights of the individual. We therefore need a broad public debate and legal regulation, for instance the protection of neurorights, to prevent misuse of such technologies and to distribute their benefits fairly.

[1] https://bluenotes.anz.com/posts/2019/02/the-future-of-japans-society-5-0

[2] https://www.trendreport.de/die-digitale-zukunft-ist-die-society-5-0/

[3] https://en.wikipedia.org/wiki/Fourth_Industrial_Revolution

[4] https://link.springer.com/article/10.1007/s10676-024-09756-8

[5] https://www.weforum.org/reports/the-internet-of-bodies-is-here-tackling-new-challenges-of-technology-governance

[6] https://www.mdpi.com/2076-3417/11/17/8117

[7] https://www.nature.com/articles/s41587-021-01112-1

[8] https://www.acatech.de/publikation/innovationspotenziale-der-mensch-maschine-interaktion/

[9] https://www.heise.de/tp/features/The-Age-of-Transhumanist-Politics-Has-Begun-3371228.html?seite=all

[10] https://pubs.acs.org/doi/10.1021/nn4012847

[11] https://www.industrytap.com/knowledge-doubling-every-12-months-soon-to-be-every-12-hours/3950

[12] https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/986301/Human_Augmentation_SIP_access2.pdf

Thursday, 28 March 2019

Using The Wisdom Of Crowds To Make Cities Smarter

How could networks of innovative cities contribute to the solution of humanity’s existential problems?

Given the ongoing digital revolution and our present-day sustainability challenges, we have to reinvent the way cities are operated. We propose that the requirement of organizing societies in a more resilient way implies the need for more decentralized solutions, based on digitally assisted self-organization, and that this concept is also compatible with sustainability requirements and stronger democratic participation. The project by Prof. Dirk Helbing and his Computational Social Science team will investigate whether such a decentralized, participatory approach could compete with a fully centralized approach in terms of efficiency and sustainability, or even outperform it. This requires, in particular, figuring out how distributed co-creation processes can be coordinated and lifted to a professional level in a scalable way.

The main questions of the project are: How could more participatory smart cities work, and how can they meet the requirements of being more efficient, sustainable and resilient? What are their risks and benefits compared with centralized approaches? What could digital societies that fit our culture look like, for example ones based on values such as freedom, equality and solidarity (liberté, égalité, fraternité), and what performance can be expected from them?

The project brings together two research directions: first, the automation of mobility solutions based on the Internet of Things and Machine Learning approaches, as they have been pursued within the “smart cities” paradigm and, second, novel collaborative approaches as they have been recently discussed under labels such as participatory resilience, digital democracy, City Olympics, open source urbanism, and the “socio-ecological finance system”.

In German:

Wie „Smart Cities“ mit Schwarmintelligenz noch besser werden können

Wie können Netzwerke innovativer Städte zur Lösung der existentiellen Menschheitsprobleme beitragen? Angesichts der fortschreitenden digitalen Revolution und unserer heutigen Nachhaltigkeitsherausforderungen müssen wir die Art und Weise, wie Städte organisiert werden, neu erfinden. Wir schlagen vor, dass die Notwendigkeit, die Gesellschaft krisenfester zu gestalten, stärker dezentralisierte Lösungen auf der Grundlage digital unterstützter Selbstorganisation erfordert, und dass dieses Konzept auch mit den Nachhaltigkeitsanforderungen und einer stärkeren demokratischen Beteiligung vereinbar ist. Das Projekt von Prof. Dirk Helbing und seinem Computational Social Science Team soll untersuchen, ob ein derart dezentraler, partizipativer Ansatz mit einem vollständig zentralisierten Ansatz in Bezug auf Effizienz und Nachhaltigkeit konkurrieren kann oder sogar noch besser abschneidet. Dies erfordert insbesondere herauszufinden, wie verteilte Prozesse der Ko-Kreation skalierbar koordiniert und auf professionelles Niveau gebracht werden können.

Die Hauptfragen des Projekts lauten: Wie könnten stärker partizipative Smart Cities funktionieren und wie können sie die Anforderungen erfüllen, effizienter, nachhaltiger und krisenfester zu sein? Welche Risiken und Vorteile bestehen im Vergleich zu zentralisierten Ansätzen? Wie können digitale Gesellschaften aussehen, die zu unserer Kultur passen, also beispielsweise auf Werten wie Freiheit, Gleichheit und Solidarität (liberté, égalité, fraternité) basieren, und welche Leistungsfähigkeit kann von ihnen erwartet werden?

Das Projekt bringt zwei Forschungsrichtungen zusammen: erstens die Automatisierung von Mobilitätslösungen, die auf den Ansätzen des Internets der Dinge und des maschinellen Lernens beruhen, wie sie beim Ansatz von „Smart Cities“ („intelligenten Städte“) zum Einsatz kommen; zweitens neuartige kollaborative Ansätze, wie sie neuerdings unter Stichworten wie partizipative Resilienz, digitale Demokratie, Städte-Olympiaden, Open Source Stadtentwicklung und dem sozio-ökologischen Finanzsystem „Fin4“ diskutiert werden.

Wednesday, 28 March 2018

Internet of Things and Autonomous Driving – Centralized or Decentralized?

By Dirk Helbing (ETH Zurich/TU Delft/Complexity Science Hub Vienna)

Digital technologies (from cloud computing to Big Data, from Artificial Intelligence to cognitive computing, from robotics to 3D printing, from the Internet of Things to Virtual Reality, from blockchain technology to quantum computing) have opened up amazing opportunities for our future. The development of autonomous vehicles, for example, promises the next level of comfort and safety in mobility systems. At the same time, transport as a service allows for a new level of sustainability, as far fewer cars, parking lots and garages (and far less material and energy to build them) will be needed to offer the mobility we need. Nevertheless, the discussion about privacy, cybercrime, autonomous weapons, and “trolley problems”, for example, illustrates that, besides great opportunities, there are also major challenges and risks. Recently, and not only in connection with Cambridge Analytica and Facebook, the public media have started to write about a “techlash” (i.e. a technological backlash), and engineering organizations such as the IEEE have started to work on frameworks such as “ethically aligned design”[1] and “value-sensitive design” or “design for values”.[2] For example, experts discuss solutions such as “privacy by design” or “democracy by design”[3] (see Fig. 1).

Figure 1: Core issues to consider in digital democracy platforms

One of the questions raised in this connection is: should we organize the data-rich society of the future in a centralized or decentralized way? This also concerns the deployment of the 5G network in Europe. An increasing number of experts recommend the development and use of decentralized information technologies[4] due to increasing dangers of misuse and vulnerabilities (e.g. by means of hacking), drawbacks for democracy, and matters of resilience. In the following, I will briefly discuss economic, political, technological and health issues to consider.

- A uniform, Europe-wide 5G network would not be economical. Making autonomous driving dependent on it could considerably delay autonomous driving. Today, many areas in Europe do not yet have 3G or 4G coverage. This includes many railway connections and many major roads, even in economically leading countries such as Germany. Besides, there are many regions lacking a mobile phone signal altogether. It would be very expensive and take many years to deploy a 5G network all over Europe that could offer the standardized and reliable services needed to operate all traffic. By the time the deployment was completed, we would probably already have the next generations of wireless technology, and perhaps new mobility concepts, too.

- A Europe-wide control grid implies dangers to democracy. The developments in countries such as Turkey show that powerful technologies can also be turned against people. A similar argument has recently been made about Facebook and various other IT companies, which have engaged in what is sometimes referred to as “surveillance capitalism” as well as in the manipulation of the opinions, emotions, decisions and behaviours of their users. It could be a political concern that technologies such as big nudging and neuromarketing aimed at manipulating people’s minds can be traced back to fascist projects and ideology. Moreover, it may raise concerns that some of the biggest companies harvesting Big Data across Europe grew powerful during the Nazi regime or are linked to the military-industrial complex. Given that technologies originally developed for autocratic states and secret services are also being applied to entire populations in Western democracies,[5] concerns are certainly not pointless. Decentralized data control could counter related risks.

- If 5G were deployed all over Europe as planned, this could imply health risks for hundreds of millions of people. Currently, we do not know enough about the implications for health if today’s radiation thresholds are exceeded or raised. In principle, as 5G is in the microwave spectrum, significant interaction of 5G radiation with biological matter, including the brain, cannot be sufficiently excluded. Hence, there may be undesirable side effects.

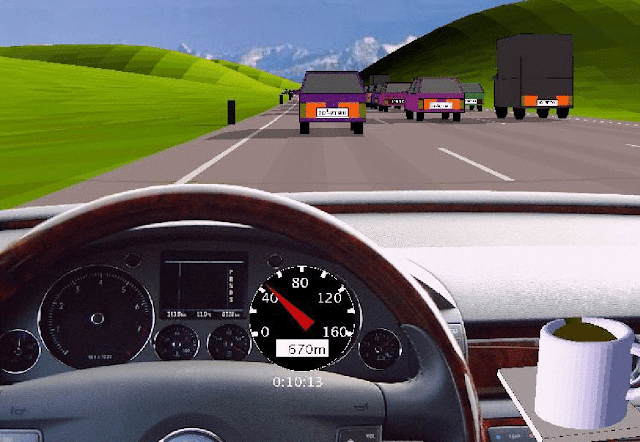

- Autonomous driving as well as the Internet of Things can be operated in a decentralized way such that the local organization or self-organization of systems is enabled via real-time feedback. This includes traffic assistant systems based on “mechanism design” to reduce congestion on freeways, which is done by changing interactions between cars, based on local measurements. Novel self-organizing traffic light control systems are also able to considerably improve traffic flows in cities based on local measurements and interactions. Both can even be operated without a control centre. The traffic situation around the corner is more difficult to handle, but solutions using relay stations or laser-based systems are being developed.[6]

In summary, given the above concerns, the current “techlash”, and the fact that a decentralized realization of autonomous driving and IoT applications is possible, Europe would be wise to engage in a “digitization 2.0”, oriented primarily towards local empowerment and coordination. This calls, in particular, for technical solutions supporting informational self-determination (see Fig. 2). Data- and AI-based personal digital assistants could support people in taking better decisions, in being more creative and innovative, and in coordinating with each other and cooperating more successfully. They could also help us manage our personal data, namely who would have access to what kinds of data and for what purposes. As trusted companies would get access to more data, the competition for data and trust would be expected to promote a trustworthy digital society. This would not only benefit autonomous driving.

Figure 2: Core issues to achieve informational self-determination.

Appendix:

Digitally Assisted Self-Organization

(excerpt from my book “The Automation of Society Is Next”)

Avoiding traffic jams

Since the early days of computers, traffic engineers have always sought ways to improve the flow of traffic. The traditional "telematics" approach to reducing congestion was based on the concept of a traffic control center that collects information from a large number of traffic sensors. This control center would then centrally determine the best strategy and implement it in a top-down way, by introducing variable speed limits on motorways or using traffic lights at junctions, for example. Recently, however, researchers and engineers have started to explore a different and more efficient approach, which is based on distributed control.

In the following, I will show that local interactions may lead to a favorable kind of self-organization of a complex dynamical system, if the components of the system (in the above example, the vehicles) interact with each other in a suitable way. Moreover, I will demonstrate that only a slight modification of these interactions can turn bad outcomes (such as congestion) into good outcomes (such as free traffic flow). Therefore, in complex dynamical systems, "interaction design", also known as "mechanism design", is the secret of success.

Assisting traffic flow

Some years ago, Martin Treiber, Arne Kesting, Martin Schönhof, and I had the pleasure of being involved in the development of a new traffic assistance system together with a research team of Volkswagen. The system we invented is based on the observation that, in order to prevent (or delay) the traffic flow from breaking down and to use the full capacity of the freeway, it is important to reduce disruptions to the flow of vehicles. With this in mind, we created a special kind of adaptive cruise control (ACC) system, where adjustments are made by a certain proportion of self-driving cars that are equipped with the ACC system. A traffic control center is not needed for this. The ACC system includes a radar sensor, which measures the distance to the car in front and the relative velocity. The measurement data are then used in real time to accelerate and decelerate the ACC car automatically. Such radar-based ACC systems already existed before. In contrast to conventional ACC systems, however, the one developed by us did not merely aim to reduce the burden of driving. It also increased the stability of the traffic flow and capacity of the road. Our ACC system did this by taking into account what nearby vehicles were doing, thereby stimulating a favorable form of self-organization in the overall traffic flow. This is why we call it a "traffic assistance system" rather than a "driver assistance system".

The distributed control approach adopted by the underlying ACC system was inspired by the way fluids flow. When a garden hose is narrowed, the water simply flows faster through the bottleneck. Similarly, in order to keep the traffic flow constant, either the traffic needs to become denser or the vehicles need to drive faster, or both. The ACC system, which we developed with Volkswagen many years before people started to talk about Google cars, imitates the natural interactions and acceleration of driver-controlled vehicles most of the time. But whenever the traffic flow needs to be increased, the time gap between successive vehicles is slightly reduced. In addition, our ACC system increases the acceleration of vehicles exiting a traffic jam in order to reach a high traffic flow and stabilize it.
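To make the idea of "slightly reducing the time gap" and "increasing the acceleration" concrete, here is a minimal sketch of a radar-based car-following rule. The excerpt does not say which control law the ACC system used; the sketch assumes the Intelligent Driver Model as a stand-in, and all parameter values as well as the helper `acc_parameters` are hypothetical and chosen only for illustration.

```python
import math

def idm_acceleration(v, gap, dv, v0=33.3, T=1.5, a=1.0, b=2.0, s0=2.0, delta=4):
    """Acceleration (m/s^2) of a following car under an IDM-style law (assumed model).

    v   : own speed (m/s)
    gap : distance to the car in front (m), as a radar sensor would measure it
    dv  : approach rate v - v_leader (m/s), i.e. the relative velocity
    v0  : desired speed, T: desired time gap (s)
    a, b: maximum acceleration and comfortable deceleration (m/s^2)
    s0  : minimum standstill distance (m)
    """
    # Desired dynamic gap: grows with speed and with the approach rate.
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a * b)))
    # Free-road term minus interaction term with the leading vehicle.
    return a * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)

def acc_parameters(leaving_jam):
    """Hypothetical strategy: shorter time gap and stronger acceleration at a jam exit."""
    if leaving_jam:
        return {"T": 1.0, "a": 1.5}
    return {"T": 1.5, "a": 1.0}

# Same measured situation, two driving strategies.
normal = idm_acceleration(20.0, 40.0, 0.0, **acc_parameters(False))
jam_exit = idm_acceleration(20.0, 40.0, 0.0, **acc_parameters(True))
print(f"normal: {normal:.2f} m/s^2, leaving a jam: {jam_exit:.2f} m/s^2")
```

The point of the sketch is that nothing but local measurements (own speed, gap, relative velocity) enters the rule, so no traffic control center is involved; only the parameters change with the local traffic situation.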

Creating favorable collective effects

Most other driver assistance systems today operate in a "selfish" way. They are focused on individual driver comfort rather than on creating better flow conditions for everyone. Our approach, in contrast, seeks to obtain system-wide benefits through a self-organized collective effect based on "other-regarding" local interactions. This is a central feature of what I call "Social Technologies". Interestingly, even if only a small proportion of cars (say, 20 percent) are equipped with our ACC system, this is expected to support a favorable self-organization of the traffic flow.[7] By reducing the reaction and response times, the real-time measurement of distances and relative velocities using radar sensors allows the ACC vehicles to adjust their speeds better than human drivers can. In other words, the ACC system manages to increase the traffic flow and its stability by improving the way vehicles accelerate and interact with each other.

Figure 3: Snapshot of a computer simulation of stop-and-go traffic on a freeway.[8]

A simulation video we created illustrates how effective this approach can be.[9] As long as the ACC system is turned off, traffic flow develops the familiar and annoying stop-and-go pattern of congestion. When seen from a bird's-eye view, it is evident that the congestion originates from small disruptions caused by vehicles joining the freeway from an entry lane. But once the ACC system is turned on, the stop-and-go pattern vanishes and the vehicles flow freely.

In summary, driver assistance systems modify the interaction of vehicles based on real-time measurements. Importantly, they can do this in such a way that they produce a coordinated, efficient and stable traffic flow in a self-organized way. Our traffic assistance system was also successfully tested in real-world traffic conditions. In fact, it was very impressive to see how naturally our ACC system already drove a decade ago. Since then, experimental cars have become smarter every year.

Cars with collective intelligence

A key issue for the operation of the ACC system is to discover where and when it needs to alter the way a vehicle is being driven. The right moments of intervention can be determined by connecting the cars in a communication network. Many cars today contain a lot of sensors that can be used to give them "collective intelligence". They can perceive the driving state of the vehicle (e.g. free or congested flow) and determine the features of the local environment to discern what nearby cars are doing. By communicating with neighboring cars through wireless car-to-car communication,[10] the vehicles can assess the situation they are in (such as the surrounding traffic state), take autonomous decisions (e.g. adjust driving parameters such as speed), and give advice to drivers (e.g. warn of a traffic jam behind the next curve). One could say that such vehicles acquire "social" abilities in that they can autonomously coordinate their movements with other vehicles.
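As a rough illustration of how a vehicle might turn messages from neighboring cars into a traffic-state estimate and a warning, consider the sketch below. The function names, the speed threshold and the message format (a plain list of speeds) are assumptions made for this example; the excerpt does not describe the actual protocol.

```python
from statistics import mean

def assess_traffic_state(own_speed, neighbour_speeds, congested_below=15.0):
    """Classify the local traffic state from own and received speeds (m/s).

    The 15 m/s threshold is a hypothetical value, not taken from the source.
    """
    speeds = [own_speed, *neighbour_speeds]
    return "congested" if mean(speeds) < congested_below else "free"

def advice(state_here, state_ahead):
    """Return a driver warning if free flow is about to meet congestion."""
    if state_here == "free" and state_ahead == "congested":
        return "warn: traffic jam ahead"
    return None

# A car in free flow hears from cars beyond the next curve that they are crawling.
here = assess_traffic_state(30.0, [28.0, 31.0])
ahead = assess_traffic_state(30.0, [2.0, 4.0, 3.0])
print(here, ahead, advice(here, ahead))
```

Each car decides for itself using only what it senses and what nearby cars report, which is the sense in which the intelligence is "collective" rather than centrally computed.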

Self-organizing traffic lights

Let's have a look at another interesting example: the coordination of traffic lights. In comparison to the flow of traffic on freeways, urban traffic poses additional challenges. Roads are connected into complex networks with many junctions, and the main problem is how to coordinate the traffic at all these intersections. When I began to study this difficult problem, my goal was to find an approach that would work not only when conditions are ideal, but also when they are complicated or problematic. Irregular road networks, accidents or building sites are examples of the types of problems that are often encountered. Given that the flow of traffic in urban areas varies greatly over the course of days and seasons, I argue that the best approach is one that flexibly adapts to the prevailing local travel demand, rather than one that is pre-planned for "typical" traffic situations at a certain time and weekday. Rather than controlling vehicle flows by switching traffic lights in a top-down way, as is done by traffic control centers today, I propose that it would be better if the actual local traffic conditions determined the traffic lights in a bottom-up way.

But how can self-organizing traffic lights, based on the principle of distributed control, perform better than the top-down control of a traffic center? Is this possible at all? Yes, indeed. Let us explore this now. Our decentralized approach to traffic light control was inspired by the discovery of oscillatory pedestrian flows. Specifically, Peter Molnar and I observed alternating pedestrian flows at bottlenecks such as doors.[11] There, the crowd surges through the constriction in one direction. After some time, however, the flow direction turns. As a consequence, pedestrians surge through the bottleneck in the opposite direction, and so on. While one might think that such oscillatory flows are caused by a pedestrian traffic light, the turning of the flow direction rather results from the build-up and relief of "pressure" in the crowd.

Could one use this pressure principle underlying such oscillatory flows to define a self-organizing traffic light control?[12] In fact, a road intersection can be understood as a bottleneck too, but one with flows in several directions. Based on this principle, could traffic flows control the traffic lights in a bottom-up way rather than letting the traffic lights control the vehicle flows in a top-down way, as we have it today? Just when I was asking myself this question, a student named Stefan Lämmer knocked at my door and wanted to write a PhD thesis.[13] This is where our investigations began.

How to outsmart centralized control

Let us first discuss how traffic lights are controlled today. Typically, there is a traffic control center that collects information about the traffic situation all over the city. Based on this information, (super)computers try to identify the optimal traffic light control, which is then implemented as if the traffic center were a "benevolent dictator". However, when trying to find a traffic light control that optimizes the vehicle flows, there are many parameters that can be varied: the order in which green lights are given to the different vehicle flows, the green time periods, and the time delays between the green lights at neighboring intersections (the so-called "phase shift"). If one were to vary all these parameters systematically for all traffic lights in the city, there would be so many parameter combinations to assess that the optimization could not be done in real time. The optimization problem is just too demanding.
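
To get a feeling for why this search cannot be done in real time, the size of the search space can be estimated in a few lines of Python. This is only a back-of-the-envelope sketch: the function name and all numbers (switching sequences, green-time settings, phase shifts, number of intersections) are illustrative assumptions, not figures from an actual traffic control center.

```python
def count_control_settings(n_intersections, n_sequences, n_green_times, n_phase_shifts):
    """Count city-wide settings if every intersection is varied independently.

    All arguments are hypothetical discretization choices, not measured values.
    """
    # Options at a single intersection: switching order x green time x phase shift.
    per_intersection = n_sequences * n_green_times * n_phase_shifts
    # Independent choices at each intersection multiply.
    return per_intersection ** n_intersections


# Assumed example: 6 switching sequences, 10 green-time settings, 10 phase shifts.
two_intersections = count_control_settings(2, 6, 10, 10)
whole_city = count_control_settings(100, 6, 10, 10)

print(two_intersections)                     # combinations for just two intersections
print(len(str(whole_city)), "digits")        # size of the number for 100 intersections
```

Even with this coarse discretization, the number of combinations grows exponentially with the number of intersections, which is why the search space has to be constrained in practice.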

Therefore, a typical approach is to operate each intersection in a periodic way and to synchronize these cycles as much as possible, in order to create a "green wave". This approach significantly constrains the search space of considered solutions, but the optimization task may still not be solvable in real time. Due to these computational constraints, traffic-light control schemes are usually optimized offline for "typical" traffic flows, and subsequently applied during the corresponding time periods (for example, on Monday mornings between 10am and 11am, or on Friday afternoons between 3pm and 4pm, or after a soccer game). In the best case, these schemes are subsequently adapted to match the actual traffic situation at any given time, by extending or shortening the green phases. But the order in which the roads at any intersection get a green light (i.e. the switching sequence) usually remains the same.

Unfortunately, the efficiency of even the most sophisticated top-down optimization schemes is limited. This is because real-world traffic conditions vary to such a large extent that the typical (i.e. average) traffic flow on a particular weekday, at a particular hour and place, is not representative of the actual traffic situation at any particular place and time. For example, if we look at the number of cars behind a red light, or the proportion of vehicles turning right, the degree to which these quantities vary in space and time is approximately as large as their average value.

So how close to optimal is the pre-planned traffic light control scheme really? Traditional top-down optimization attempts based on a traffic control center produce an average vehicle queue, which increases almost linearly with the "capacity utilization" of the intersection, i.e. with the traffic volume. Let us compare this approach with two alternative ways of controlling traffic lights based on the concept of self-organization (see Fig. 4).[14] In the first approach, termed "selfish self-organization", the switching sequence of the traffic lights at each separate intersection is organized such that it strictly minimizes the travel times of the cars on the incoming road sections. In the second approach, termed "other-regarding self-organization", the local travel time minimization may be interrupted in order to clear long vehicle queues first. This may slow down some of the vehicles. But how does it affect the overall traffic flow? If there exists a faster-is-slower effect, could there be a "slower-is-faster effect", too?[15]

Figure 4: Illustration of three different kinds of traffic light control.[16]

How successful are the two self-organizing schemes compared to the centralized control approach? To evaluate this, besides locally measuring the outflows from the road sections, we assume that the inflows are measured as well (see Fig. 5). This flow information is exchanged between the neighboring intersections in order to make short-term predictions about the arrival times of vehicles. Based on this information, the traffic lights self-organize by adapting their operation to these predictions.
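
The idea of turning upstream flow measurements into short-term arrival predictions can be sketched as follows. This is a deliberately simplified illustration, assuming that every vehicle travels the road section at one constant speed; the function names, the section length, and the speed are hypothetical and not taken from the cited studies.

```python
def predict_arrivals(upstream_departures_s, section_length_m, speed_m_per_s):
    """Shift each upstream departure time by the travel time along the road section.

    Assumes a single constant speed for all vehicles (a simplification).
    """
    travel_time_s = section_length_m / speed_m_per_s
    return [t + travel_time_s for t in upstream_departures_s]


def expected_vehicles(arrival_times_s, window_start_s, window_end_s):
    """Count predicted arrivals inside the time window [start, end)."""
    return sum(1 for t in arrival_times_s if window_start_s <= t < window_end_s)


# Assumed example: three vehicles leave the upstream intersection at 0 s, 2 s and 4 s
# and travel a 300 m road section at 15 m/s.
arrivals = predict_arrivals([0.0, 2.0, 4.0], section_length_m=300.0, speed_m_per_s=15.0)
print(arrivals)
print(expected_vehicles(arrivals, 20.0, 23.0))
```

A downstream traffic light could use such a count to decide whether an approaching platoon is worth waiting for before switching.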

Figure 5: Illustration of the measurement of traffic flows arriving at a road section of interest (left) and departing from it (center).[17]

When the capacity utilization of the intersection is low, both of the self-organizing traffic light schemes described above work extremely well. They produce a traffic flow that is well-coordinated and much more efficient than top-down control. This is reflected by the shorter vehicle queues at traffic lights (compare the dotted violet line and the solid blue line with the dashed red line in Fig. 6). However, long before the maximum capacity of the intersection is reached, the average queue length gets out of hand because some road sections with low traffic volumes are not given enough green time. That's one of the reasons why we still use traffic control centers.

Figure 6: Illustration of the performance of a road intersection (quantified by the overall queue length), as a function of the utilization of its capacity (i.e. traffic volume).[18]

Interestingly, by changing the way in which intersections respond to local information about arriving traffic streams, it is possible to outperform top-down optimization attempts at high capacity utilizations as well (see the solid blue line in Fig. 6). To achieve this, the objective of minimizing the travel time at each intersection must be combined with a second rule, which stipulates that any queue of vehicles above a certain critical length must be cleared immediately.[19] The second rule avoids excessive queues, which may cause spill-over effects and obstruct neighboring intersections. Thus, this form of self-organization can be viewed as "other-regarding". Nevertheless, it produces not only shorter vehicle queues than "selfish self-organization", but shorter travel times on average, too.[20]
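
The combination of the two rules can be illustrated with a small Python sketch. It is not the controller described in the cited papers: the local travel time minimization is replaced here by a crude stand-in (serve the approach with the most waiting plus soon-arriving vehicles), and the function name, the approach labels, and all numbers are assumptions made for illustration.

```python
def choose_green(queues, predicted_arrivals, critical_length):
    """Return the approach that should get the next green light.

    queues: dict mapping approach name -> number of vehicles waiting
    predicted_arrivals: dict mapping approach name -> vehicles expected shortly
    critical_length: queue length above which a queue must be cleared at once
    """
    # Second rule (other-regarding): any queue above the critical length is
    # cleared first, to avoid spill-over into neighboring intersections.
    overlong = {a: q for a, q in queues.items() if q > critical_length}
    if overlong:
        return max(overlong, key=overlong.get)

    # First rule (stand-in for local travel time minimization): serve the
    # approach where a green light would help the most vehicles.
    return max(queues, key=lambda a: queues[a] + predicted_arrivals.get(a, 0))


# Assumed example state of one intersection.
queues = {"north": 3, "east": 12, "south": 5}
arrivals = {"north": 10, "east": 0, "south": 1}

print(choose_green(queues, arrivals, critical_length=20))  # no overlong queue
print(choose_green(queues, arrivals, critical_length=10))  # east queue is overlong
```

With a critical length of 20, the platoon approaching from the north wins the green light; with a critical length of 10, the overlong queue on the eastern approach is cleared first.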

The above graph shows a further noteworthy effect: the combination of two bad strategies can be the best one! In fact, clearing the longest queue (see the grey dash-dotted line in Fig. 6) always performs worse than top-down optimization (dashed red line). When the capacity utilization of the intersection is high, strict travel time minimization also produces longer queues (see the dotted violet line). Therefore, if the two strategies (clearing long queues and minimizing travel times) are applied in isolation, they do not perform well at all. However, contrary to what one might expect, the combination of these two under-performing strategies, as applied in the other-regarding kind of self-organization, produces the best results (see the solid blue curve).

This is because the other-regarding self-organization of traffic lights flexibly takes advantage of gaps that randomly appear in the traffic flow to ease congestion elsewhere. In this way, non-periodic sequences of green lights may result, which outperform the conventional periodic service of traffic lights. Furthermore, the other-regarding self-organization creates a flow-based coordination of traffic lights among neighboring intersections. This coordination spreads over large parts of the city in a self-organized way through a favorable cascade effect.

A pilot study

After our promising simulation study, Stefan Lämmer approached the public transport authority in Dresden, Germany, to collaborate with them on traffic light control. Until then, the traffic center had applied a state-of-the-art adaptive control scheme producing "green waves". But although it was the best system on the market, they weren't entirely happy with it. Around a busy railway station in the city center they could either produce "green waves" of motorized traffic on the main arterials or prioritize public transport, but not both. The particular challenge was to prioritize public transport while so many different tram tracks and bus lanes cut through Dresden's highly irregular road network. However, if public transport (buses and trams) were given a green light whenever it approached an intersection, this would destroy the green wave system needed to keep the motorized traffic flowing. Inevitably, the resulting congestion would spread quickly, causing massive disruption over a huge area of the city.

When we simulated the expected outcomes of the other-regarding self-organization of traffic lights and compared them with the state-of-the-art control they used, we got amazing results.[21] The waiting times were reduced for all modes of transport, dramatically for public transport and pedestrians, but also somewhat for motorized traffic. Overall, the roads were less congested, trams and buses could be prioritized, and travel times became more predictable, too. In other words, the new approach can benefit everybody (see Fig. 7), including the environment. Thus, it is only logical that the other-regarding self-organization approach was recently implemented at some traffic intersections in Dresden with amazing success (a 40 percent reduction in travel times). "Finally, a dream is becoming true", said one of the observing traffic engineers, and a bus driver inquired in the traffic center: "Where have all the traffic jams gone?"[22]

Figure 7: Improvement of intersection performance for different modes of transport achieved by other-regarding self-organization. The graph displays cumulative waiting times. Public transport has to wait 56 percent less, motorized traffic 9 percent less, and pedestrians 36 percent less.[23]

Lessons learned

The example of self-organized traffic control allows us to draw some interesting conclusions. Firstly, in a complex dynamical system that varies considerably in a barely predictable way and can't be optimized in real time, the principle of bottom-up self-organization can outperform centralized top-down control. This is true even if the central authority has comprehensive and reliable data. Secondly, if a selfish local optimization is applied, the system may perform well in certain circumstances. However, if the interactions between the system's components are strong (here: if the traffic volume is too high), local optimization may not lead to large-scale coordination (here: of neighboring intersections). Thirdly, an "other-regarding" distributed control approach, which adapts to local needs and additionally takes into account external effects ("externalities"), can coordinate the behavior of neighboring components within the system such that it produces favorable and efficient outcomes.

In conclusion, a centralized authority may not be able to manage a complex dynamical system well because even supercomputers may not have enough processing power to identify the most appropriate course of action in real time. By contrast, selfish local optimization tends to fail due to a breakdown of coordination when interactions in the system become too strong. However, an other-regarding local self-organization approach can overcome both of these problems by considering externalities (such as spill-over effects). This results in a system that is both efficient and resilient to unforeseen circumstances.

[1] See the links http://standards.ieee.org/develop/indconn/ec/ead_v1.pdf, http://standards.ieee.org/develop/indconn/ec/ead_v2.pdf, https://ethicsinaction.ieee.org

[2] http://designforvalues.tudelft.nl

[3] https://www.ams-institute.org/solution/democracy-by-design/

[4] https://www.scientificamerican.com/article/will-democracy-survive-big-data-and-artificial-intelligence/, https://techcrunch.com/2016/10/09/a-decentralized-web-would-give-power-back-to-the-people-online/

[5] see e.g. the Snowden and Wikileaks “Vault 7” revelations or the TED talk by Tristan Harris: https://www.youtube.com/watch?v=C74amJRp730

[6] M. O’Toole, D.B. Lindell, and G. Wetzstein, Nature 555, 338-341 (2018); Autonome Autos: Laser-System und Algorithmen helfen verdeckte Objekte zu erkennen, https://www.heise.de/newsticker/meldung/Autonome-Autos-Laser-System-und-Algorithmen-helfen-verdeckte-Objekte-zu-erkennen-3987149.html

[7] A. Kesting, M. Treiber, M. Schönhof, and D. Helbing (2008) Adaptive cruise control design for active congestion avoidance. Transportation Research C 16(6), 668-683 ; A. Kesting, M. Treiber, and D. Helbing (2010) Enhanced intelligent driver model to access the impact of driving strategies on traffic capacity. Phil. Trans. R. Soc. A 368(1928), 4585-4605

[8] I would like to thank Martin Treiber for providing this graphic.

[10] A. Kesting, M. Treiber, and D. Helbing (2010) Connectivity statistics of store-and-forward intervehicle communication. IEEE Transactions on Intelligent Transportation Systems 11(1), 172-181.

[11] D. Helbing and P. Molnár (1995) Social force model for pedestrian dynamics. Physical Review E 51, 4282-4286

[12] D. Helbing, S. Lämmer, and J.-P. Lebacque (2005) Self-organized control of irregular or perturbed network traffic. Pages 239-274 in: C. Deissenberg and R. F. Hartl (eds.) Optimal Control and Dynamic Games (Springer, Dordrecht)

[13] S. Lämmer (2007) Reglerentwurf zur dezentralen Online-Steuerung von Lichtsignalanlagen in Straßennetzwerken (PhD thesis, TU Dresden)

[14] S. Lämmer and D. Helbing (2008) Self-control of traffic lights and vehicle flows in urban road networks. Journal of Statistical Mechanics: Theory and Experiment, P04019, see http://iopscience.iop.org/1742-5468/2008/04/P04019; S. Lämmer, R. Donner, and D. Helbing (2007) Anticipative control of switched queueing systems, The European Physical Journal B 63(3) 341-347; D. Helbing, J. Siegmeier, and S. Lämmer (2007) Self-organized network flows. Networks and Heterogeneous Media 2(2), 193-210; D. Helbing and S. Lämmer (2006) Method for coordination of concurrent processes for control of the transport of mobile units within a network, Patent WO/2006/122528

[15] C. Gershenson and D. Helbing, When slower is faster, see http://arxiv.org/abs/1506.06796; D. Helbing and A. Mazloumian (2009) Operation regimes and slower-is-faster effect in the control of traffic intersections. European Physical Journal B 70(2), 257–274

[16] Reproduced from D. Helbing (2013) Economics 2.0: The natural step towards a self-regulating, participatory market society. Evol. Inst. Econ. Rev. 10, 3-41, with kind permission of Springer Publishers

[17] Reproduction from S. Lämmer (2007) Reglerentwurf zur dezentralen Online-Steuerung von Lichtsignalanlagen in Straßennetzwerken (Dissertation, TU Dresden) with kind permission of Stefan Lämmer, accessible at

[18] Reproduced from D. Helbing (2013) Economics 2.0: The natural step towards a self-regulating, participatory market society. Evol. Inst. Econ. Rev. 10, 3-41, with kind permission of Springer Publishers

[19] This critical length can be expressed as a certain percentage of the road section.

[20] Due to spill-over effects and a lack of coordination between neighboring intersections, selfish self-organization may cause a quick spreading of congestion over large parts of the city analogous to a cascading failure. This outcome can be viewed as a traffic-related "tragedy of the commons", as the overall capacity of the intersections is not used in an efficient way.

[21] S. Lämmer and D. Helbing (2010) Self-stabilizing decentralized signal control of realistic, saturated network traffic, Santa Fe Working Paper No. 10-09-019, see http://www.santafe.edu/media/workingpapers/10-09-019.pdf ; S. Lämmer, J. Krimmling, A. Hoppe (2009) Selbst-Steuerung von Lichtsignalanlagen - Regelungstechnischer Ansatz und Simulation. Straßenverkehrstechnik 11, 714-721

[22] Latest results from a real-life test can be found here: S. Lämmer (2015) Die Selbst-Steuerung im Praxistest, see http://stefanlaemmer.de/Publikationen/Laemmer2015.pdf

[23] Adapted reproduction from S. Lämmer and D. Helbing (2010) Self-stabilizing decentralized signal control of realistic, saturated network traffic, Santa Fe Working Paper No. 10-09-019, see

Labels: “ethically aligned design”, 5G, Artificial Intelligence, autonomous vehicles, decentralised, Internet of Things

Thursday, 26 January 2017

THE GOLDEN AGE – How to Build a Better Digital Society

by Dirk Helbing (ETH Zurich/TU Delft)

Introduction: Another Revolution or War?


As it turns out, we are in the middle of a revolution: the digital revolution. This revolution isn’t just about technology. It will reinvent most business models and transform all economic sectors, but it will also fundamentally change the organization of our society. The best way to imagine this transition may be the metamorphosis of a caterpillar into a butterfly. In a few years, the world will look very different…

This may sound exaggerated, but it probably is not. We are now seeing a perfect storm created by the confluence of many powerful new digital technologies. These include social media, cloud storage and cloud computing, Big Data, Artificial Intelligence and cognitive computing, robotics, 3D printing, the Internet of Things, Blockchain Technology, and Virtual Reality. Smartphones are just one example of a technology that was deemed science fiction two decades ago but is now real and ubiquitous. Many people can no longer imagine life without these technologies.

The digital technologies described above are now reorganizing our world. Companies such as Microsoft, Apple, Google, Amazon and Facebook, established 15 to 40 years ago, often in garages by students without academic degrees, are now among the most valuable companies in the world. By way of disruptive innovations, these technology giants have overtaken the traditional large corporations in the oil and car industries.

Airbnb is now challenging the hotel business. Uber is unsettling the taxi, transport and logistics sectors. Bitcoin, the digital currency, threatens the big banks. With its fashionable electric cars, Tesla makes classical car companies look outdated. Google plans to replace today’s individual vehicle traffic by promoting “transport as a service” based on self-driving cars. We may soon have the same level of mobility that we have today while using only 15 percent of today’s vehicles. Parking lots, garages, traffic police and more may soon be things of the past. 3D printers enable the cheap production of personalized products. Looking ahead, global mass production will gradually be replaced by individually customized, locally created products.

These are just some of the notable trends. However, anyone who thinks that the digital revolution is just about faster Internet, smarter devices, more data, better services, and new business models greatly underestimates the “creative destruction” that comes along with digital technologies.

Thanks to new machine learning approaches such as “deep learning”, recent progress in the area of Artificial Intelligence has been remarkable. These systems learn by themselves, and they are getting smarter at an exponentially accelerating pace. By now, intelligent computer algorithms can perform as well as humans in terms of reading text, understanding spoken language, or recognizing patterns. They can also learn repetitive and rule-based procedures. They tend to make fewer mistakes, do not get tired, and do not complain. They also do not have to pay taxes. In other words, it is only a matter of time until they replace human jobs. This will also hit many middle-class jobs. Later on, however, I will explain how we can turn this into an opportunity.

Some people hope that, for each job lost, a new one will be created, and that these jobs will be better than those of today as economic progress advances. However, people may forget that the transition from the agricultural to the industrial society, as well as the transition from there to the service society, was accompanied by serious financial and economic crises, by revolutions and wars. Many countries are already in the midst of a financial and economic crisis, and in some countries, the unemployment rate of young people has passed 50 percent. In many places, the effective incomes of the lower and middle classes have been stagnant or decreasing, while the level of inequality has grown dramatically.

In 2016, Oxfam reported that 62 people owned as much wealth as the poorer half of the world’s population. By 2017, this number had shrunk to only 8 people. Four of them are Americans running big IT companies. By now, the level of inequality is comparable to the situation before the French Revolution. This implies that the purchasing power of people is eroding, such that further economic development is obstructed. Consumers can no longer afford to buy all the products that companies can provide. Many companies and banks could cease to exist, and the richest segment of people (“the elite”) is starting to worry about an impending revolution. The current situation is highly unstable and does not seem to serve anyone well. The rise of populism is one result of this.

So it appears that the current world order is breaking down. Even though we have better technologies and more data than ever before, these have been accompanied by increased global challenges. As the world becomes more networked, systemic complexity is growing faster than the data available to describe it, and the amount of data is accumulating faster than our capacity to process and transmit it. Paradoxically, even though technological solutions are more powerful than ever, attempts to control the world in a top-down way appear to fail. At the World Economic Forum in 2017, representatives of the elites could no longer deny the impending crisis. The success principles of the past (globalization, optimization and regulation) no longer seemed to work well or to persuade people. After President Trump’s inauguration, the German Foreign Minister, Frank-Walter Steinmeier, and Chancellor Angela Merkel both announced the end of a historical era. This may be the end of globalism and capitalism as we know them.

There are several reasons for these developments, and they are all based on self-created problems. The first reason is that, as we go on networking the world, the complexity is increasing factorially, at an amazing pace. Importantly, in a highly connected world, the intended effects will often fail to materialize because of side effects, feedback effects, domino effects, and cascading effects. Cascading effects, in particular, can easily get out of hand through a series of coupled events, which may end disastrously. Electrical blackouts are one example.

The second reason is the attempt to steer each individual’s behaviour (I will discuss this in detail later). Today, those who run our societies change their “commands” at the same speed at which individuals are trying to adjust to them. This undermines a hierarchical organization, which requires a slower speed of change on the controlling “top” level as compared to the controlled “bottom” level. Natural hierarchies exemplify this, as they occur in physics (atoms, molecules, solid bodies, planets, solar systems, galaxies) and biology (cells, organs, organisms, social communities, organizations, societies).

We are living in times in which our employers, the state, and companies are constantly trying to make us do all sorts of things. This causes a fragmentation of attention and action, distraction and chaos. This will be elaborated in more detail later. One result of this development is that we are increasingly locked in informational filter bubbles or personalized echo chambers. This makes us lose our ability to understand other points of view, or to find reasonable compromises and consensus. Consequently, conflict and extremism in our societies have increased, which in turn undermines social cohesion. One might say that our society is increasingly divided into social atoms, which are now trying to find new bonds. Populism is a side effect of this.

I must stress that this has created a highly dangerous situation, which has the potential to collapse today’s social order and also that of the world. This development was predicted years ago, but little has been done to stop the underlying cascading effects. Now that we are at the tipping point, our future is more uncertain and unpredictable than ever. As a consequence, people may lose orientation. For example, we may end up with a data-driven version of fascism (a big brother society or “brave new world”), or of communism (a “benevolent dictatorship” that believes it knows what would be best for everyone and imposes this on us), or of feudalism (a “surveillance capitalism” that serves us according to our “personal value”, as measured by a “citizen score”).

However, we may also make the conscious decision to upgrade democracy and capitalism as we know them, by digital means. On the whole, standing at a tipping point creates unprecedented opportunities for mankind to re-invent society, and to build a better world. However, if we continue as before, we may experience the outbreak of large-scale wars. Such wars are quite likely, and they may break out for several reasons:

- The new wave of automation driven by artificial intelligence and robotics may cause unemployment to sky-rocket, and undermine the stability of societies, which can easily lead to a war.

- The spread of populism and nationalism implies dangers for peace as well, as it often values people of different ethnic origin and cultural background differently. Cultures that consider themselves superior, however, tend to wage wars against those they consider inferior. This tendency is further nourished by the claim that “war is the mother of invention” (Heraclitus even said, “of everything”).

- The probability that we may see a financial and economic collapse is also quite high. In fact, the Limits to Growth study, which tries to anticipate the future fate of our planet, predicts such a collapse in the near future. The on-going financial crisis makes it clear that capitalism as we know it is failing. Mass unemployment in many countries, public and private debt levels, low economic growth rates and negative interest rates indicate that we have reached a point of no return. At the 2017 Davos meeting, many concluded that capitalism 1.0 is losing support; it will (have to) be replaced by something else.

- Impending future resource shortages will cause further problems, which can also precipitate a war.

- Climate change could instigate wars as well, namely by desertification, the deterioration of once fruitful soil, or natural disasters (e.g. floods). It is also expected that climate change may cause the largest loss of species since the extinction of the dinosaurs, which may undermine our ecosystem and our food chain.

- Furthermore, we may see cultural or religious clashes, and this seems to be already happening.

- A worrying concern is poor maturity in the constructive use of social media and digital technologies. Shit storms, hate speech, and fake news are illustrations of this. We have to look back in history to the days when the printing press was invented. One result was the Thirty Years' War, which claimed the lives of 5-8 million people. Similarly, the invention of the radio was an important factor in the success of the Nazi regime. Radio enabled the spread of propaganda on an unprecedented scale, from which people were not able to distance themselves enough. The result was the Holocaust and World War II, which claimed up to 80 million lives. Today, social media has become the battlefield of a world-wide disinformation war. Shit storms, hate speech, and fake news appear to be getting out of hand. How will this play out?

In conclusion, there are multiple factors present that can contribute towards large-scale wars. Given the “nuclear overkill”, this could claim an unprecedented number of victims and, in all likelihood, make large swathes of our planet uninhabitable. Should this happen, it would be the most shameful event of human history, and we would never be able to believe in human values or civilisation again.

What makes me optimistic, however, is the fact that we know about all these dangers and we have history to learn from.

Solving the above problems is not a trivial exercise. However, new solutions have recently been proposed. The crucial issue for us is whether these new ideas will spread quickly enough, and whether politics will have the courage to implement them now.

Making the right decisions and taking appropriate actions has become a matter of life and death. If we go on as before, we will probably experience a number of global disasters. I am convinced, however, that the time to create a framework for global prosperity and peace has come.

In the following chapters, I will describe the issues with our current socio-economic approach in further detail, and explain why it is outdated and destined to fail. I will also present an alternative vision of a better future for everyone, one that is based on the success principles of co-creation, co-evolution, collective intelligence, and self-organization. I am convinced that these principles are able to lift our economy and society to the next level. This should make society more resilient to unexpected developments and shocks (sometimes called “black swans”). Without a doubt, we have arrived at a crossroads. The question we must now ask ourselves is: Will we have the courage to follow a new path, or will we continue on our old path and fall off the cliff?

(Comments and inputs to dhelbing@ethz.ch are welcome!)

Labels: Apple, artificial intelligence, complexity, deep learning, Dirk Helbing, Facebook, filter bubbles, Google, Internet of Things, populism, Tesla, World Economic Forum
